Machine Production Engineering (Teknik Produksi Mesin)

The machine production engineering (manufacturing) specialization studies how to manufacture a product or machine component, considered from the standpoint of technique, process, and management. It builds on courses such as Manufacturing Processes, Machine Tool Design, Operations and Production Management, and Design for Manufacturing.

Manufacturing Engineering

Manufacturing engineering is a field of engineering that deals with manufacturing practices and with the research and development of processes, machines and equipment. It also deals with integrating facilities and systems to produce quality products at optimal expenditure, by applying the principles of physics and the study of manufacturing systems such as the following:

- Craft or Guild system

- Putting-out system

- English system of manufacturing

- American system of manufacturing

- Soviet collectivism in manufacturing

- Mass production

- Computer Integrated Manufacturing

- Computer-aided technologies in manufacturing

- Just In Time manufacturing

- Lean manufacturing

- Flexible manufacturing

- Mass customization

- Agile manufacturing

- Rapid manufacturing

- Prefabrication

- Ownership

- Fabrication

- Publication

Figure: A set of six-axis robots used for welding

Manufacturing engineers work on the development and creation of physical artifacts, production processes, and technology. Their success or failure directly affects the advancement of technology and the spread of innovation. The discipline is very broad, includes the design and development of products, and overlaps strongly with mechanical engineering, industrial engineering, electrical engineering, electronic engineering, computer science, materials management, and operations management. The field first gained prominence in the mid to late 20th century, when industrialized countries introduced factories with:

1. Advanced statistical methods of quality control, pioneered by the American mathematician William Edwards Deming, whom his home country initially ignored. The same methods of quality control later turned Japanese factories into world leaders in cost-effectiveness and production quality.

2. Industrial robots on the factory floor, introduced in the late 1970s. These computer-controlled welding arms and grippers could perform simple tasks such as attaching a car door quickly and flawlessly 24 hours a day. This cut costs and improved speed.

Modern developments

Modern manufacturing engineering study includes all intermediate processes required for the production and integration of a product's components.

Some industries, such as semiconductor and steel manufacturing, use the term fabrication for these topics instead.

Automation is used in various manufacturing processes such as machining and welding. Automated manufacturing refers to the application of automation to produce goods in a factory. Most of the advantages of automation technology carry over to manufacturing processes. The main advantages of automated manufacturing are higher consistency and quality, shorter lead times, simplified production, reduced handling, improved workflow, and, when the automation is implemented well, higher worker morale.

Robotics is the application of mechatronics and automation to create robots, which are often used in manufacturing to perform tasks that are dangerous, unpleasant, or repetitive. These robots may be of any shape and size, but all are preprogrammed and interact physically with the world. To create a robot, an engineer typically employs kinematics (to determine the robot's range of motion) and mechanics (to determine the stresses within the robot). Robots are used extensively in manufacturing engineering.

They allow businesses to save money on labor, to perform tasks that are either too dangerous or too precise for humans to perform economically, and to ensure better quality. Many companies employ assembly lines of robots, and some factories are so robotized that they can run by themselves. Outside the factory, robots have been employed in bomb disposal, space exploration, and many other fields. Robots are also sold for various residential applications.

Education

Certification Programs in Manufacturing Engineering

Manufacturing engineers typically hold a bachelor's degree in engineering with a major in manufacturing engineering. Such a degree usually takes four to five years of study, followed by five more years of professional practice to qualify as a professional engineer. Manufacturing engineering technology is a more applied qualification path.

Degrees for manufacturing engineers are usually designated Bachelor of Engineering [BE] or [BEng] or Bachelor of Science [BS] or [BSc]; for manufacturing technologists they are Bachelor of Technology [B.TECH] or Bachelor of Applied Science [BASc] in manufacturing, depending on the university. Master's degrees include Master of Engineering [ME] or [MEng] in manufacturing, Master of Science [M.Sc] in manufacturing management, Master of Science [M.Sc] in industrial and production management, and Master of Science [M.Sc] or Master of Engineering [ME] in design, which is a sub-discipline of manufacturing. Doctoral [PhD] or [DEng] level courses in manufacturing are also available, depending on the university.

The undergraduate degree curriculum generally includes units covering physics, mathematics, computer science, project management and specific topics in mechanical and manufacturing engineering. Initially such topics cover most, if not all, of the sub-disciplines of manufacturing engineering. Students then choose to specialize in one or more sub-disciplines towards the end of the degree.

Syllabus

The curriculum for the bachelor's degree in manufacturing is very similar to that of mechanical engineering, and includes:

- Statics and dynamics

- Strength of materials and solid mechanics

- Instrumentation and measurement

- Thermodynamics, heat transfer, energy conversion, and HVAC

- Fluid mechanics and fluid dynamics

- Mechanism design (including kinematics and dynamics)

- Manufacturing technology or processes

- Hydraulics and pneumatics

- Mathematics - in particular, calculus, differential equations, statistics, and linear algebra.

- Engineering design

- Mechatronics and control theory

- Materials engineering

- Drafting, CAD (including solid modeling), and CAM

Bachelor's degrees in these two areas typically differ by only a few specialized classes, except that the mechanical engineering degree is much more math-intensive.

Manufacturing Engineering Certifications

Certification and Licensure:

Professional Engineer is the term for registered or licensed engineers in some countries who are permitted to offer their professional services directly to the public. In North America the designations for licensure are PE (USA) and PEng (Canada). To qualify for a Professional Engineer license in the USA, a candidate needs a bachelor's degree from an ABET-recognized university, a passing score on a state examination, and four years of work experience, usually gained via a structured internship. More recent graduates have the option of dividing this licensure process into two segments. The Fundamentals of Engineering (FE) exam is often taken immediately after graduation, and the Principles and Practice of Engineering exam is taken after four years of working in a chosen engineering field.

Society of Manufacturing Engineers (SME) Certifications (USA)

The SME administers qualifications specifically for the manufacturing industry. These are not degree level qualifications and are not recognized at professional engineering level. The following discussion deals with qualifications in the USA only. Qualified candidates for the Certified Manufacturing Technologist Certificate (CMfgT) must pass a three-hour, 130-question multiple-choice exam. The exam covers math, manufacturing processes, manufacturing management, automation, and related subjects. Additionally, a candidate must have at least four years of combined education and manufacturing-related work experience.

Certified Manufacturing Engineer (CMfgE) is a qualification administered by the Society of Manufacturing Engineers, Dearborn, Michigan, USA. Candidates for the Certified Manufacturing Engineer certificate must pass a three-hour, 150-question multiple-choice exam that covers more in-depth topics than the CMfgT exam. CMfgE candidates must also have eight years of combined education and manufacturing-related work experience, with a minimum of four years of work experience.

Certified Engineering Manager (CEM). The Certified Engineering Manager Certificate is also designed for engineers with eight years of combined education and manufacturing experience. The test is four hours long and has 160 multiple-choice questions. The CEM certification exam covers business processes, teamwork, responsibility and other management-related categories.

Modern tools

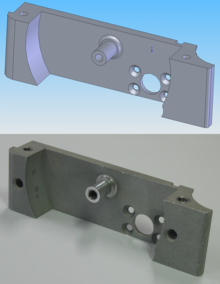

Figure: CAD model and CNC machined part

Many manufacturing companies, especially those in industrialized nations, have begun to incorporate computer-aided engineering (CAE) programs into their existing design and analysis processes, including 2D and 3D solid modeling computer-aided design (CAD). This method has many benefits, including easier and more exhaustive visualization of products, the ability to create virtual assemblies of parts, and the ease of use in designing mating interfaces and tolerances.

Other CAE programs commonly used by product manufacturers include product lifecycle management (PLM) tools and analysis tools used to perform complex simulations. Analysis tools may be used to predict product response to expected loads, including fatigue life and manufacturability. These tools include finite element analysis (FEA), computational fluid dynamics (CFD), and computer-aided manufacturing (CAM).

Using CAE programs, a mechanical design team can quickly and cheaply iterate the design process to develop a product that better meets cost, performance, and other constraints. No physical prototype need be created until the design nears completion, allowing hundreds or thousands of designs to be evaluated instead of a relative few. In addition, CAE analysis programs can model complicated physical phenomena that cannot be solved by hand, such as viscoelasticity, complex contact between mating parts, or non-Newtonian flows.

As manufacturing engineering is linked with other disciplines, as seen in mechatronics, multidisciplinary design optimization (MDO) is being used with other CAE programs to automate and improve the iterative design process. MDO tools wrap around existing CAE processes, allowing product evaluation to continue even after the analyst goes home for the day. They also utilize sophisticated optimization algorithms to more intelligently explore possible designs, often finding better, innovative solutions to difficult multidisciplinary design problems.

Sub-disciplines

Mechanics

Figure: Mohr's circle, a common tool to study stresses in a mechanical element

Mechanics is, in the most general sense, the study of forces and their effect upon matter. Typically, engineering mechanics is used to analyze and predict the acceleration and deformation (both elastic and plastic) of objects under known forces (also called loads) or stresses. Subdisciplines of mechanics include:

- Statics, the study of non-moving bodies under known loads

- Dynamics (or kinetics), the study of how forces affect moving bodies

- Mechanics of materials, the study of how different materials deform under various types of stress

- Fluid mechanics, the study of how fluids react to forces[20]

- Continuum mechanics, a method of applying mechanics that assumes that objects are continuous (rather than discrete)

If the engineering project were the design of a vehicle, statics might be employed to design the frame of the vehicle, in order to evaluate where the stresses will be most intense. Dynamics might be used when designing the car's engine, to evaluate the forces in the pistons and cams as the engine cycles. Mechanics of materials might be used to choose appropriate materials for the manufacture of the frame and engine. Fluid mechanics might be used to design a ventilation system for the vehicle, or to design the intake system for the engine.

Kinematics

Kinematics is the study of the motion of bodies (objects) and systems (groups of objects), while ignoring the forces that cause the motion. The movement of a crane and the oscillations of a piston in an engine are both simple kinematic systems. The crane is a type of open kinematic chain, while the piston is part of a closed four-bar linkage. Engineers typically use kinematics in the design and analysis of mechanisms. Kinematics can be used to find the possible range of motion for a given mechanism, or, working in reverse, can be used to design a mechanism that has a desired range of motion.
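The idea of finding a mechanism's range of motion can be illustrated with the piston example. The following is a minimal sketch in Python, assuming an idealized in-line slider-crank (a crank of radius `crank` driving a piston through a connecting rod of length `rod`). The function names and the sample dimensions are illustrative assumptions, not taken from any particular engine.

```python
import math


def piston_position(theta: float, crank: float, rod: float) -> float:
    """Distance from the crank centre to the piston pin at crank angle theta (radians).

    Pure geometry: no forces are involved, which is what makes this kinematics.
    """
    if rod <= crank:
        raise ValueError("rod must be longer than crank for a full rotation")
    return crank * math.cos(theta) + math.sqrt(rod**2 - (crank * math.sin(theta)) ** 2)


def range_of_motion(crank: float, rod: float, samples: int = 360) -> tuple[float, float]:
    """Sample one full crank revolution and return (min, max) piston position."""
    positions = [
        piston_position(2 * math.pi * k / samples, crank, rod) for k in range(samples)
    ]
    return min(positions), max(positions)


if __name__ == "__main__":
    # Hypothetical dimensions in metres: 40 mm crank radius, 150 mm connecting rod.
    lowest, highest = range_of_motion(crank=0.04, rod=0.15)
    print(f"piston travels between {lowest:.3f} m and {highest:.3f} m")
    print(f"stroke = {highest - lowest:.3f} m")
```

Working in reverse, as the paragraph describes, would mean choosing `crank` so that the stroke (twice the crank radius in this idealized model) matches a desired value.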

Drafting

Figure: A CAD model of a mechanical double seal

Drafting or technical drawing is the means by which manufacturers create instructions for manufacturing parts. A technical drawing can be a computer model or hand-drawn schematic showing all the dimensions necessary to manufacture a part, as well as assembly notes, a list of required materials, and other pertinent information. A U.S. engineer or skilled worker who creates technical drawings may be referred to as a drafter or draftsman. Drafting has historically been a two-dimensional process, but computer-aided design (CAD) programs now allow the designer to create in three dimensions.

Instructions for manufacturing a part must be fed to the necessary machinery, either manually, through programmed instructions, or through the use of a computer-aided manufacturing (CAM) or combined CAD/CAM program. Optionally, an engineer may also manually manufacture a part using the technical drawings, but this is becoming an increasing rarity, with the advent of computer numerically controlled (CNC) manufacturing. Engineers primarily manually manufacture parts in the areas of applied spray coatings, finishes, and other processes that cannot economically or practically be done by a machine.

Drafting is used in nearly every sub-discipline of mechanical and manufacturing engineering, and by many other branches of engineering and architecture. Three-dimensional models created using CAD software are also commonly used in finite element analysis (FEA) and computational fluid dynamics (CFD).

Numerical control

Figure: A CNC Turning Center

Numerical control (NC) refers to the automation of machine tools that are operated by abstractly programmed commands encoded on a storage medium, as opposed to being manually controlled via handwheels or levers, or mechanically automated via cams alone. The first NC machines were built in the 1940s and 1950s, based on existing tools that were modified with motors that moved the controls to follow points fed into the system on punched tape. These early servomechanisms were rapidly augmented with analog and digital computers, creating the modern computer numerical control (CNC) machine tools that have revolutionized machining processes.

In modern CNC systems, end-to-end component design is highly automated using computer-aided design (CAD) and computer-aided manufacturing (CAM) programs. The programs produce a computer file that a postprocessor interprets to extract the commands needed to operate a particular machine; these commands are then loaded into the CNC machines for production. Since any particular component might require a number of different tools (drills, saws, etc.), modern machines often combine multiple tools into a single "cell". In other cases, a number of different machines are used with an external controller and human or robotic operators that move the component from machine to machine. In either case, the complex series of steps needed to produce any part is highly automated and produces a part that closely matches the original CAD design.

History

Earlier forms of automation

Cams

The automation of machine tool control began in the 19th century with cams that "played" a machine tool in the way that cams had long been playing musical boxes or operating elaborate cuckoo clocks. Thomas Blanchard built his gun-stock-copying lathes (1820s-30s), and the work of people such as Christopher Miner Spencer developed the turret lathe into the screw machine (1870s). Cam-based automation had already reached a highly advanced state by World War I (1910s).

However, automation via cams is fundamentally different from numerical control because it cannot be abstractly programmed. Cams can encode information, but getting the information from the abstract level of an engineering drawing into the cam is a manual process that requires sculpting and/or machining and filing.

Various forms of abstractly programmable control had existed during the 19th century: those of the Jacquard loom, player pianos, and mechanical computers pioneered by Charles Babbage and others. These developments had the potential for convergence with the automation of machine tool control starting in that century, but the convergence did not happen until many decades later.

Tracer control

The application of hydraulics to cam-based automation resulted in tracing machines that used a stylus to trace a template, such as the enormous Pratt & Whitney "Keller Machine", which could copy templates several feet across. Another approach was "record and playback", pioneered at General Motors (GM) in the 1950s, which used a storage system to record the movements of a human machinist, and then play them back on demand. Analogous systems are common even today, notably the "teaching lathe" which gives new machinists a hands-on feel for the process. None of these were numerically programmable, however, and required a master machinist at some point in the process, because the "programming" was physical rather than numerical.

Servos and selsyns

One barrier to complete automation was the required tolerances of the machining process, which are routinely on the order of thousandths of an inch. Although connecting some sort of control to a storage device like punched cards was easy, ensuring that the controls were moved to the correct position with the required accuracy was another issue. The movement of the tool resulted in varying forces on the controls that would mean a linear input would not result in linear tool motion. The key development in this area was the introduction of the servomechanism, which produced highly accurate measurement information. Attaching two servos together produced a selsyn, where a remote servo's motions were accurately matched by another. Using a variety of mechanical or electrical systems, the output of the selsyns could be read to ensure proper movement had occurred (in other words, forming a closed-loop control system).

The first serious suggestion that selsyns could be used for machining control was made by Ernst F. W. Alexanderson, a Swedish immigrant to the U.S. working at General Electric (GE). Alexanderson had worked on the problem of torque amplification that allowed the small output of a mechanical computer to drive very large motors, which GE used as part of a larger gun laying system for US Navy ships. Like machining, gun laying requires very high accuracy, much finer than a degree, and the forces during the motion of the gun turrets were non-linear. In November 1931 Alexanderson suggested to the Industrial Engineering Department that the same systems could be used to drive the inputs of machine tools, allowing them to follow the outline of a template without the strong physical contact needed by existing tools like the Keller Machine. He stated that it was a "matter of straight engineering development". However, the concept was ahead of its time from a business development perspective, and GE did not take the matter seriously until years later, when others had pioneered the field.

Parsons and the invention of NC

The birth of NC is generally credited to John T. Parsons, a machinist and salesman at his father's machining company, Parsons Corp.

In 1942 he was told that helicopters were going to be the "next big thing" by the former head of Ford Trimotor production, Bill Stout. He called Sikorsky Aircraft to inquire about possible work, and soon got a contract to build the wooden stringers in the rotor blades. After he had set up production at a disused furniture factory and ramped it up, one of the blades failed, and the failure was traced to the spar. Because at least some of the problem appeared to stem from spot welding a metal collar on the stringer to the metal spar, Parsons suggested a new method of attaching the stringers to the spar using adhesives, never before tried on an aircraft design.

That development led Parsons to consider the possibility of using stamped metal stringers instead of wood, which would be much stronger and easier to make. The stringers for the rotors were built from a design provided by Sikorsky, which was sent to Parsons as a series of 17 points defining the outline. Parsons then had to "fill in" the dots with a French curve to generate an outline they could use as a template to build the jigs for the wooden stringers. Making a metal cutting tool able to cut that particular shape proved to be difficult. Parsons went to Wright Field to see Frank Stulen, the head of the Propeller Lab Rotary Wing Branch. During their conversation, Stulen concluded that Parsons didn't really know what he was talking about. Parsons realized this, and hired Stulen on the spot. Stulen started work on 1 April 1946 and hired three new engineers to join him.

Stulen's brother worked at Curtiss-Wright Propeller, and mentioned that they were using punched card calculators for engineering calculations. Stulen decided to adopt the idea to run stress calculations on the rotors, the first detailed automated calculations on helicopter rotors. When Parsons saw what Stulen was doing with the punched card machines, he asked Stulen if they could be used to generate an outline with 200 points instead of the 17 they were given, and to offset each point by the radius of a mill cutting tool. Cutting at each of those points would produce a relatively accurate cutout of the stringer even in hard steel, and the result could easily be filed down to a smooth shape. The resulting tool would be useful as a template for stamping metal stringers. Stulen had no problem making such a program, and used it to produce large tables of numbers that would be taken onto the machine floor. Here, one operator read the numbers off the charts to two other operators, one on each of the X- and Y- axes, and they would move the cutting head to that point and make a cut.[4] This was called the "by-the-numbers method".
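The offsetting step described here can be sketched in a few lines. The following is a minimal illustration in Python, not a reconstruction of Stulen's punched-card program. It assumes a closed outline listed counter-clockwise, estimates the outward normal at each point from its two neighbours, and moves the point outward by the cutter radius so that the cutter's edge, not its centre, touches the outline. The names `offset_outline` and `circle_outline` and the test shape are hypothetical.

```python
import math


def offset_outline(points, cutter_radius):
    """Return cutter-centre positions for a closed, counter-clockwise outline.

    Each point is moved outward along the local normal by cutter_radius.
    The normal is estimated from the neighbouring points (central difference).
    """
    n = len(points)
    centres = []
    for i in range(n):
        x_prev, y_prev = points[i - 1]
        x, y = points[i]
        x_next, y_next = points[(i + 1) % n]
        # Tangent direction from previous neighbour to next neighbour.
        tx, ty = x_next - x_prev, y_next - y_prev
        length = math.hypot(tx, ty)
        if length == 0:
            raise ValueError("neighbouring points coincide; tangent is undefined")
        # For a counter-clockwise outline, rotating the tangent by -90 degrees
        # gives the outward-pointing unit normal.
        nx, ny = ty / length, -tx / length
        centres.append((x + cutter_radius * nx, y + cutter_radius * ny))
    return centres


def circle_outline(radius, count=200):
    """Hypothetical test shape: `count` points on a circle, counter-clockwise."""
    return [
        (radius * math.cos(2 * math.pi * k / count),
         radius * math.sin(2 * math.pi * k / count))
        for k in range(count)
    ]


if __name__ == "__main__":
    outline = circle_outline(2.0, 200)      # 200 points, as in the text
    path = offset_outline(outline, 0.25)    # assumed 0.25 in cutter radius
    print(path[0])
```

A table of such offset coordinates is the kind of data that could be read out to operators point by point. Real outlines with sharp corners or concave regions tighter than the cutter radius need more care than this sketch provides.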

At that point Parsons conceived of a fully automated tool. With enough points on the outline, no manual working would be needed, but with manual operation, the time saved by having the part more closely match the outline was offset by the time needed to move the controls. If the machine's inputs were attached directly to the card reader, this delay, and any associated manual errors, would be removed and the number of points could be dramatically increased. Such a machine could repeatedly punch out perfectly accurate templates on command. But at the time Parsons had no funds to develop his ideas.

When one of Parsons's salesmen was on a visit to Wright Field, he was told of the problems the newly-formed US Air Force was having with new jet designs. He asked if Parsons had anything to help them. Parsons showed Lockheed their idea of an automated mill, but they were uninterested. They decided to use 5-axis template copiers to produce the stringers, cutting from a metal template, and had already ordered the expensive cutting machine. But as Parsons noted:

Now just picture the situation for a minute. Lockheed had contracted to design a machine to make these wings. This machine had five axes of cutter movement, and each of these was tracer controlled using a template. Nobody was using my method of making templates, so just imagine what chance they were going to have of making an accurate airfoil shape with inaccurate templates.

Parsons's worries soon came true, and Lockheed's protests that they could fix the problem eventually rang hollow. In 1949 the Air Force arranged funding for Parsons to build his machines on his own. Early work with Snyder Machine & Tool Corp proved that the system of directly driving the controls from motors failed to give the accuracy needed to set the machine for a perfectly smooth cut. Since the mechanical controls did not respond in a linear fashion, they could not simply be driven with a given amount of power, because the differing forces meant the same amount of power would not always produce the same amount of motion in the controls. No matter how many points were included, the outline would still be rough.

Enter MIT

This was not an impossible problem to solve, but would require some sort of feedback system, like a selsyn, to directly measure how far the controls had actually turned. Faced with the daunting task of building such a system, in the spring of 1949 Parsons turned to Gordon S. Brown's Servomechanisms Laboratory at MIT, which was a world leader in mechanical computing and feedback systems. During the war the Lab had built a number of complex motor-driven devices like the motorized gun turret systems for the Boeing B-29 Superfortress and the automatic tracking system for the SCR-584 radar. They were naturally suited to technological transfer into a prototype of Parsons's automated "by-the-numbers" machine.

The MIT team was led by William Pease, assisted by James McDonough. They quickly concluded that Parsons's design could be greatly improved: if the machine did not simply cut at points A and B, but instead moved smoothly between the points, then not only would it make a perfectly smooth cut, but it could do so with many fewer points. The mill could cut lines directly instead of needing a large number of cutting points to "simulate" them. A three-way agreement was arranged between Parsons, MIT, and the Air Force, and the project officially ran from July 1949 to June 1950. The contract called for the construction of two "Card-a-matic Milling Machines", a prototype and a production system. Both were to be handed to Parsons for attachment to one of their mills in order to develop a deliverable system for cutting stringers.

Instead, in 1950 MIT bought a surplus Cincinnati Milling Machine Company "Hydro-Tel" mill of their own and arranged a new contract directly with the Air Force that froze Parsons out of further development. Parsons would later comment that he "never dreamed that anybody as reputable as MIT would deliberately go ahead and take over my project." In spite of the development being handed to MIT, Parsons filed for a patent on "Motor Controlled Apparatus for Positioning Machine Tool" on 5 May 1952, sparking a filing by MIT for a "Numerical Control Servo-System" on 14 August 1952. Parsons received US Patent 2,820,187 on 14 January 1958, and the company sold an exclusive license to Bendix. IBM, Fujitsu and General Electric all took sub-licenses after having already started development of their own devices.

MIT's machine

MIT fit gears to the various handwheel inputs and drove them with roller chains connected to motors, one for each of the machine's three axes (X, Y, and Z). The associated controller consisted of five refrigerator-sized cabinets that, together, were almost as large as the mill they were connected to. Three of the cabinets contained the motor controllers, one controller for each motor, the other two the digital reading system.

Unlike Parsons's original punched card design, the MIT design used standard 7-track punch tape for input. Three of the tracks were used to control the different axes of the machine, while the other four encoded various control information. The tape was read in a cabinet that also housed six relay-based hardware registers, two for each axis. With every read operation the previously read point was copied into the "starting point" register, and the newly read one into the "ending point". The tape was read continually and the number in the register increased until a "stop" instruction was encountered, four holes in a line.

The final cabinet held a clock that sent pulses through the registers, compared them, and generated output pulses that interpolated between the points. For instance, if the points were far apart the output would have pulses with every clock cycle, whereas closely spaced points would only generate pulses after multiple clock cycles. The pulses were sent into a summing register in the motor controllers, which counted up by the number of pulses every time they were received. The summing registers were connected to a digital-to-analog converter that increased power to the motors as the count in the registers increased.
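The pulse-rate behaviour described above (a pulse on every clock cycle for widely spaced points, a pulse only every few cycles for closely spaced ones) can be illustrated with an overflow accumulator. This is a hedged sketch in Python of one generic way to spread pulses evenly over a fixed number of clock cycles. It is an assumption for illustration, not a description of MIT's relay and clock circuitry, and the name `interpolate` is hypothetical.

```python
def interpolate(start, end, cycles):
    """Spread each axis's move evenly over `cycles` clock cycles.

    start, end: integer positions per axis, in pulse units.
    Returns a list with one tuple per clock cycle; each entry is +1, -1 or 0,
    meaning "emit a forward pulse", "emit a reverse pulse" or "no pulse".
    """
    deltas = [e - s for s, e in zip(start, end)]
    if cycles <= 0 or any(abs(d) > cycles for d in deltas):
        raise ValueError("need at least as many cycles as pulses on the longest axis")
    accumulators = [0] * len(deltas)
    schedule = []
    for _ in range(cycles):
        pulses = []
        for axis, delta in enumerate(deltas):
            # Add the distance to the accumulator; an overflow past `cycles`
            # emits one pulse. Long moves overflow often, short moves rarely.
            accumulators[axis] += abs(delta)
            if accumulators[axis] >= cycles:
                accumulators[axis] -= cycles
                pulses.append(1 if delta > 0 else -1)
            else:
                pulses.append(0)
        schedule.append(tuple(pulses))
    return schedule


if __name__ == "__main__":
    # X moves 8 units (a pulse every cycle), Y moves 2 units (a pulse every 4th cycle).
    for cycle, pulses in enumerate(interpolate((0, 0), (8, 2), cycles=8)):
        print(cycle, pulses)
```

Because both axes finish on the same clock cycle, the tool follows an approximately straight line between the two points rather than moving one axis at a time.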

The registers were decremented by encoders attached to the motors and the mill itself, which would reduce the count by one for every one degree of rotation. Once the second point was reached the pulses from the clock would stop, and the motors would eventually drive the mill to the encoded position. Each 1 degree rotation of the controls produced a 0.0005 inch movement of the cutting head. The programmer could control the speed of the cut by selecting points that were closer together for slow movements, or further apart for rapid ones.
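The bookkeeping in the two paragraphs above can be sketched as a small simulation: command pulses count the summing register up, encoder feedback counts it down, and the axis comes to rest when the register reaches zero. This is a toy model in Python under stated assumptions. Only the 0.0005 inch per degree figure comes from the text; the proportional `gain`, the `pulses_per_step` batching (one simulation step standing in for several clock cycles), and the function names are hypothetical simplifications, and only forward moves are modelled.

```python
INCH_PER_DEGREE = 0.0005  # from the text: 1 degree of rotation moves the head 0.0005 in


def pulses_for_move(distance_in: float) -> int:
    """Number of one-degree command pulses needed for a move of distance_in inches."""
    return round(distance_in / INCH_PER_DEGREE)


def simulate_axis(distance_in: float, pulses_per_step: int = 4, gain: float = 0.5):
    """Toy model of one axis; returns (final position in inches, peak register count)."""
    pulses_left = pulses_for_move(distance_in)
    register = 0     # summing register: commanded degrees not yet turned
    turned_deg = 0   # degrees reported by the encoder so far
    peak = 0
    while pulses_left > 0 or register > 0:
        # Interpolator side: feed the next batch of command pulses into the register.
        batch = min(pulses_per_step, pulses_left)
        register += batch
        pulses_left -= batch
        peak = max(peak, register)
        # Converter and motor side: drive grows with the count (assumed proportional),
        # with at least one degree of motion while any count remains.
        step = min(register, max(1, int(gain * register))) if register else 0
        # Encoder feedback: every degree actually turned removes one count.
        turned_deg += step
        register -= step
    return turned_deg * INCH_PER_DEGREE, peak


if __name__ == "__main__":
    print(pulses_for_move(1.0))   # degrees of rotation for a one-inch move
    position, peak = simulate_axis(1.0)
    print(f"final position {position:.4f} in, peak register count {peak}")
```

In this model the register acts as the error signal of a closed loop: whatever the motor's momentary lag, the axis stops only once every commanded degree has been confirmed by the encoder.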

The system was publicly demonstrated in September 1952, appearing in that month's Scientific American. MIT's system was an outstanding success by any technical measure, quickly making any complex cut with extremely high accuracy that could not easily be duplicated by hand. However, the system was terribly complex, including 250 vacuum tubes, 175 relays and numerous moving parts, which reduced its reliability in a production environment. It was also very expensive: the total bill presented to the Air Force was $360,000.14 ($2,641,727.63 in 2005 dollars). Between 1952 and 1956 the system was used to mill a number of one-off designs for various aviation firms, in order to study their potential economic impact.

Proliferation of NC

The Air Force funding for the project ran out in 1953, but development was picked up by the Giddings and Lewis Machine Tool Co. In 1955 many of the MIT team left to form Concord Controls, a commercial NC company with Giddings' backing, producing the Numericord controller. Numericord was similar to the MIT design, but replaced the punch tape with a magnetic tape reader that General Electric was working on. The tape contained a number of signals of different phases, which directly encoded the angle of the various controls. The tape was played at a constant speed in the controller, which set its half of the selsyn to the encoded angles while the remote side was attached to the machine controls. Designs were still encoded on paper tape, but the tapes were transferred to a reader/writer that converted them into magnetic form. The magtapes could then be used on any of the machines on the floor, where the controllers were greatly reduced in complexity. Developed to produce highly accurate dies for an aircraft skinning press, the Numericord "NC5" went into operation at G&L's plant at Fond du Lac, WI in 1955.

Monarch Machine Tool also developed a numerically controlled lathe, starting in 1952. They demonstrated their machine at the 1955 Chicago Machine Tool Show (predecessor of today's IMTS), along with a number of other vendors with punched card or paper tape machines that were either fully developed or in prototype form. These included Kearney & Trecker's Milwaukee-Matic II, which could change its cutting tool under numerical control, a common feature on modern machines.

A Boeing report noted that "numerical control has proved it can reduce costs, reduce lead times, improve quality, reduce tooling and increase productivity." In spite of these developments, and glowing reviews from the few users, uptake of NC was relatively slow. As Parsons later noted:

The NC concept was so strange to manufacturers, and so slow to catch on, that the US Army itself finally had to build 120 NC machines and lease them to various manufacturers to begin popularizing its use.

In 1958 MIT published its report on the economics of NC. They concluded that the tools were competitive with human operators, but simply moved the time from the machining to the creation of the tapes. In Forces of Production, Noble claims that this was the whole point as far as the Air Force was concerned; moving the process off of the highly unionized factory floor and into the un-unionized white collar design office. The cultural context of the early 1950s, a second Red Scare with a widespread fear of a bomber gap and of domestic subversion, sheds light on this interpretation. It was strongly feared that the West would lose the defense production race to the Communists, and that syndicalist power was a path toward losing, either by "getting too soft" (less output, greater unit expense) or even by Communist sympathy and subversion within unions (arising from their common theme of empowering the working class).

CNC arrives

Many of the commands for the experimental parts were programmed "by hand" to produce the punch tapes that were used as input. During the development of Whirlwind, MIT's real-time computer, John Runyon coded a number of subroutines to produce these tapes under computer control. Users could enter a list of points and speeds, and the program would generate the punch tape. In one instance, this process reduced the time required to produce the instruction list and mill the part from 8 hours to 15 minutes. This led to a proposal to the Air Force to produce a generalized "programming" language for numerical control, which was accepted in June 1956.

Starting in September, Ross and Pople outlined a language for machine control that was based on points and lines, developing this over several years into the APT programming language. In 1957 the Aircraft Industries Association (AIA) and Air Material Command at Wright-Patterson Air Force Base joined with MIT to standardize this work and produce a fully computer-controlled NC system. On 25 February 1959 the combined team held a press conference showing the results, including a 3D machined aluminum ash tray that was handed out in the press kit.

Meanwhile, Patrick Hanratty was making similar developments at GE as part of their partnership with G&L on the Numericord. His language, PRONTO, beat APT into commercial use when it was released in 1958. Hanratty then went on to develop MICR magnetic ink characters that were used in cheque processing, before moving to General Motors to work on the groundbreaking DAC-1 CAD system.

APT was soon extended to include "real" curves in 2D-APT-II. With its release, MIT reduced its focus on CNC as it moved into CAD experiments. APT development was picked up with the AIA in San Diego, and in 1962, by Illinois Institute of Technology Research. Work on making APT an international standard started in 1963 under USASI X3.4.7, but many manufacturers of CNC machines had their own one-off additions (like PRONTO), so standardization was not completed until 1968, when there were 25 optional add-ins to the basic system.

Just as APT was being released in the early 1960s, a second generation of lower-cost transistorized computers was hitting the market, able to process much larger volumes of information in production settings. This reduced the cost of implementing an NC system, and by the mid-1960s APT runs accounted for a third of all computer time at large aviation firms.

CAD meets CNC

While the Servomechanisms Lab was in the process of developing their first mill, in 1953, MIT's Mechanical Engineering Department dropped the requirement that undergraduates take courses in drawing. The instructors formerly teaching these programs were merged into the Design Division, where an informal discussion of computerized design started. Meanwhile the Electronic Systems Laboratory, the newly rechristened Servomechanisms Laboratory, had been discussing whether or not design would ever start with paper diagrams in the future.

In January 1959, an informal meeting was held involving individuals from both the Electronic Systems Laboratory and the Mechanical Engineering Department's Design Division. Formal meetings followed in April and May, which resulted in the "Computer-Aided Design Project". In December 1959, the Air Force issued a one year contract to ESL for $223,000 to fund the project, including $20,800 earmarked for 104 hours of computer time at $200 per hour. This proved to be far too little for the ambitious program they had in mind, although their engineering calculation system, AED, was released in March 1965.

In 1959, General Motors started an experimental project to digitize, store and print the many design sketches being generated in the various GM design departments. When the basic concept demonstrated that it could work, they started the DAC-1 project with IBM to develop a production version. One part of the DAC project was the direct conversion of paper diagrams into 3D models, which were then converted into APT commands and cut on milling machines. In November 1963 a trunk lid design moved from 2D paper sketch to 3D clay prototype for the first time. With the exception of the initial sketch, the design-to-production loop had been closed.

Meanwhile, MIT's offsite Lincoln Labs was building computers to test new transistorized designs. The ultimate goal was essentially a transistorized Whirlwind known as TX-2, but in order to test various circuit designs a smaller version known as TX-0 was built first. When construction of TX-2 started, time in TX-0 freed up and this led to a number of experiments involving interactive input and use of the machine's CRT display for graphics. Further development of these concepts led to Ivan Sutherland's groundbreaking Sketchpad program on the TX-2.

Sutherland moved to the University of Utah after his Sketchpad work, but it inspired other MIT graduates to attempt the first true CAD system. It was Electronic Drafting Machine (EDM), sold to Control Data and known as "Digigraphics", that Lockheed used to build production parts for the C-5 Galaxy, the first example of an end-to-end CAD/CNC production system.

By 1970 there were a wide variety of CAD firms including Intergraph, Applicon, Computervision, Auto-trol Technology, UGS Corp. and others, as well as large vendors like CDC and IBM.

Proliferation of CNC

The price of computer cycles fell drastically during the 1960s with the widespread introduction of useful minicomputers. Eventually it became less expensive to handle the motor control and feedback with a computer program than it was with dedicated servo systems. Small computers were dedicated to a single mill, placing the entire process in a small box. PDP-8's and Data General Nova computers were common in these roles. The introduction of the microprocessor in the 1970s further reduced the cost of implementation, and today almost all CNC machines use some form of microprocessor to handle all operations.

The introduction of lower-cost CNC machines radically changed the manufacturing industry. Curves are as easy to cut as straight lines, complex 3-D structures are relatively easy to produce, and the number of machining steps that required human action have been dramatically reduced. With the increased automation of manufacturing processes with CNC machining, considerable improvements in consistency and quality have been achieved with no strain on the operator. CNC automation reduced the frequency of errors and provided CNC operators with time to perform additional tasks. CNC automation also allows for more flexibility in the way parts are held in the manufacturing process and the time required to change the machine to produce different components.

During the early 1970s the Western economies were mired in slow economic growth and rising employment costs, and NC machines started to become more attractive. The major U.S. vendors were slow to respond to the demand for machines suitable for lower-cost NC systems, and into this void stepped the Germans. In 1979, sales of German machines surpassed the U.S. designs for the first time. This cycle quickly repeated itself, and by 1980 Japan had taken a leadership position, U.S. sales dropping all the time. Once sitting in the #1 position in terms of sales on a top-ten chart consisting entirely of U.S. companies in 1971, by 1987 Cincinnati Milacron was in 8th place on a chart heavily dominated by Japanese firms.

Many researchers have commented that the U.S. focus on high-end applications left them in an uncompetitive situation when the economic downturn in the early 1970s led to greatly increased demand for low-cost NC systems. Unlike the U.S. companies, who had focused on the highly profitable aerospace market, German and Japanese manufacturers targeted lower-profit segments from the start and were able to enter the low-cost markets much more easily.

As computing and networking evolved, so did direct numerical control (DNC). Its long-term coexistence with less networked variants of NC and CNC is explained by the fact that individual firms tend to stick with whatever is profitable, and their time and money for trying out alternatives is limited. This explains why machine tool models and tape storage media persist in grandfathered fashion even as the state of the art advances.

DIY, hobby, and personal CNC

Recent developments in small-scale CNC have been enabled, in large part, by the Enhanced Machine Controller project from the National Institute of Standards and Technology (NIST), an agency of the US Government's Department of Commerce. EMC is a public domain program operating under the Linux operating system and working on PC-based hardware. After the NIST project ended, development continued, leading to EMC2, which is licensed under the GNU General Public License and Lesser GNU General Public License (GPL and LGPL). Derivations of the original EMC software have also led to several proprietary PC-based programs, notably TurboCNC and Mach3, as well as embedded systems based on proprietary hardware. The availability of these PC-based control programs has led to the development of DIY CNC, allowing hobbyists to build their own machines using open source hardware designs. The same basic architecture has allowed manufacturers, such as Sherline and Taig, to produce turnkey lightweight desktop milling machines for hobbyists.

The easy availability of PC-based software and support information for Mach3, written by Art Fenerty, lets anyone with some time and technical expertise make complex parts for home and prototype use. Fenerty is considered a principal founder of Windows-based PC CNC machining.

Eventually, the homebrew architecture was fully commercialized and used to create larger machinery suitable for commercial and industrial applications. This class of equipment has been referred to as Personal CNC. Parallel to the evolution of personal computers, Personal CNC has its roots in EMC and PC based control, but has evolved to the point where it can replace larger conventional equipment in many instances. As with the Personal Computer, Personal CNC is characterized by equipment whose size, capabilities, and original sales price make it useful for individuals, and which is intended to be operated directly by an end user, often without professional training in CNC technology.

Today

Although modern data storage techniques have moved on from punch tape in almost every other role, tapes are still relatively common in CNC systems. Several reasons explain this. One is easy backward compatibility of existing programs. Companies were spared the trouble of re-writing existing tapes into a new format. Another is the principle, mentioned earlier, that individual firms tend to stick with whatever is profitable, and their time and money for trying out alternatives is limited. A small firm that has found a profitable niche may keep older equipment in service for years because "if it ain't broke [profitability-wise], don't fix it." Competition places natural limits on that approach, as some amount of innovation and continuous improvement eventually becomes necessary, lest competitors be the ones who find the way to the "better mousetrap".

One change that was implemented fairly widely was the switch from paper to mylar tapes, which are much more mechanically robust. Floppy disks, USB flash drives and local area networking have replaced the tapes to some degree, especially in larger environments that are highly integrated.

The proliferation of CNC led to the need for new CNC standards that were not encumbered by licensing or particular design concepts, like APT. A number of different "standards" proliferated for a time, often based around vector graphics markup languages supported by plotters. One such standard has since become very common, the "G-code" that was originally used on Gerber Scientific plotters and then adapted for CNC use. The file format became so widely used that it has been embodied in an EIA standard. In turn, while G-code is the predominant language used by CNC machines today, there is a push to supplant it with STEP-NC, a system that was deliberately designed for CNC, rather than grown from an existing plotter standard.

While G-code is the most common method of programming, some machine-tool/control manufacturers also have invented their own proprietary "conversational" methods of programming, trying to make it easier to program simple parts and make set-up and modifications at the machine easier (such as Mazak's Mazatrol and Hurco). These have met with varying success.

A more recent advancement in CNC interpreters is support of logical commands, known as parametric programming (also known as macro programming). Parametric programs include both device commands as well as a control language similar to BASIC. The programmer can make if/then/else statements, loops, subprogram calls, perform various arithmetic, and manipulate variables to create a large degree of freedom within one program. An entire product line of different sizes can be programmed using logic and simple math to create and scale an entire range of parts, or create a stock part that can be scaled to any size a customer demands.
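The idea of scaling one part program across a product line can be illustrated with a minimal Python sketch. This is not controller macro code: the helper names `rectangle_program` and `product_line`, the default feed rate, and the 5 mm retract height are assumptions made for illustration, and real controllers' macro dialects differ.

```python
def rectangle_program(width, height, depth, feed=200.0):
    """Return G-code-style lines that trace a width x height rectangle.

    Hypothetical helper: G0 (rapid move) and G1 (linear feed move) are the
    conventional words, but a real machine usually needs more setup codes.
    """
    corners = [(width, 0.0), (width, height), (0.0, height), (0.0, 0.0)]
    lines = ["G0 X0.000 Y0.000", f"G1 Z{-depth:.3f} F{feed:.1f}"]
    for x, y in corners:
        lines.append(f"G1 X{x:.3f} Y{y:.3f}")
    lines.append("G0 Z5.000")  # retract above the part (assumed 5 mm clearance)
    return lines


def product_line(base_width, base_height, depth, scales):
    """Generate one program per scale factor from a single parametric routine."""
    return {
        s: rectangle_program(base_width * s, base_height * s, depth)
        for s in scales
    }


programs = product_line(10.0, 5.0, 1.0, scales=[1, 2])
```

Here one routine with a loop and simple arithmetic yields a program for each size, which is the same freedom that parametric programming provides inside the controller itself.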

Since about 2006, there have been efforts to bring to CNC and DNC several trends from elsewhere in information technology that have so far had little effect on them. One of these trends is the combination of greater data collection (more sensors), greater and more automated data exchange (via building new, open industry-standard XML schemas), and data mining to yield a new level of business intelligence and workflow automation in manufacturing. Another is the emergence of widely published APIs, together with the aforementioned open data standards, to encourage an ecosystem of user-generated apps and mashups, which can be both open and commercial. In other words, the IT culture of app marketplaces that began in web development and smartphone app development would spread to CNC, DNC, and the other factory automation systems that are networked with them. MTConnect is a leading effort to bring these ideas into successful implementation.

Description

Modern CNC mills differ little in concept from the original model built at MIT in 1952. Mills typically consist of a table that moves in the X and Y axes, and a tool spindle that moves in the Z (depth). The position of the tool is driven by motors through a series of step-down gears in order to provide highly accurate movements, or in modern designs, direct-drive stepper motors. Closed-loop control is not mandatory today, as open-loop control works as long as the forces are kept small enough.

As the controller hardware evolved, the mills themselves also evolved. One change has been to enclose the entire mechanism in a large box as a safety measure, often with additional safety interlocks to ensure the operator is far enough from the working piece for safe operation. Most new CNC systems built today are completely electronically controlled.

CNC-like systems are now used for any process that can be described as a series of movements and operations. These include laser cutting, welding, friction stir welding, ultrasonic welding, flame and plasma cutting, bending, spinning, pinning, gluing, fabric cutting, sewing, tape and fiber placement, routing, picking and placing (PnP), and sawing.

Tools with CNC variants

- Drills

- EDMs

- Lathes

- Milling machines

- Wood routers

- Sheet metal works (Turret Punch)

- Wire bending machines

- Hot-wire foam cutters

- Plasma cutters

- Water jet cutters

- Laser cutters

- Oxy-fuel

- Surface grinders

- Cylindrical grinders

- 3D Printing

- Induction hardening machines

Tool / machine crashing

In CNC, a "crash" occurs when the machine moves in such a way that is harmful to the machine, tools, or parts being machined, sometimes resulting in bending or breakage of cutting tools, accessory clamps, vises, and fixtures, or causing damage to the machine itself by bending guide rails, breaking drive screws, or causing structural components to crack or deform under strain. A mild crash may not damage the machine or tools, but may damage the part being machined so that it must be scrapped.

Many CNC tools have no inherent sense of the absolute position of the table or tools when turned on. They must be manually "homed" or "zeroed" to have any reference to work from. These reference points serve only to locate the part being worked on; they are not a hard motion limit on the mechanism. It is often possible to drive the machine outside the physical bounds of its drive mechanism, resulting in a collision with itself or damage to the drive mechanism.

Many CNC tools also don't know anything about their working environment. They often lack any form of sensory capability to detect problems with the machining process, and will not abort if something goes wrong. They blindly follow the machining code provided and it is up to an operator to detect if a crash is either occurring or about to occur, and for the operator to manually abort the cutting process.

If the drive system is weaker than the machine structural integrity, then the drive system simply pushes against the obstruction and the drive motors "slip in place". The machine tool may not detect the collision or the slipping, so for example the tool should now be at 210mm on the X axis but is in fact at 32mm where it hit the obstruction and kept slipping. All of the next tool motions will be off by -178mm on the X axis, and all future motions are now invalid, which may result in further collisions with clamps, vises, or the machine itself.
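The arithmetic of the example above can be worked through in a short Python sketch. The helper name `actual_positions` and the list of later targets are hypothetical and chosen only for illustration.

```python
def actual_positions(commanded, slip_error):
    """Where the tool really ends up when the controller's count is off."""
    return [target + slip_error for target in commanded]


assumed_x = 210.0  # where the controller believes the tool is (mm)
actual_x = 32.0    # where the obstruction actually stopped it (mm)
slip_error = actual_x - assumed_x  # -178.0 mm

# Hypothetical later moves: every one lands 178 mm short on the X axis.
later_targets = [250.0, 100.0, 0.0]
reached = actual_positions(later_targets, slip_error)
```

Because the controller never learns about the slip, the same offset is carried into every subsequent move until the machine is homed again.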

Collision detection and avoidance is possible, through the use of absolute position sensors (optical encoder strips or disks) to verify that motion occurred, or torque sensors or power-draw sensors on the drive system to detect abnormal strain when the machine should just be moving and not cutting, but these are not a common component of most CNC tools.

Instead, most CNC tools simply rely on the assumed accuracy of stepper motors that rotate a specific number of degrees in response to magnetic field changes. It is often assumed the stepper is perfectly accurate and never mis-steps, so tool position monitoring simply involves counting the number of pulses sent to the stepper over time. An alternate means of stepper position monitoring is usually not available, so crash or slip detection is not possible.
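A minimal Python sketch, under stated assumptions, shows why pulse counting alone cannot reveal a slip and how an independent position reading could. The class `OpenLoopAxis`, the `blocked` flag, and the tolerance value are hypothetical; the `true_steps` field stands in for physical reality that an open-loop controller cannot observe.

```python
class OpenLoopAxis:
    """Axis whose controller tracks position only by counting pulses."""

    def __init__(self, mm_per_step):
        self.mm_per_step = mm_per_step
        self.step_count = 0  # pulses the controller has sent
        self.true_steps = 0  # steps the motor really made (hidden from the controller)

    def pulse(self, n, blocked=False):
        """Send n step pulses; if blocked, the motor slips and does not move."""
        self.step_count += n
        if not blocked:
            self.true_steps += n

    def assumed_position(self):
        return self.step_count * self.mm_per_step

    def true_position(self):
        return self.true_steps * self.mm_per_step


def slip_detected(axis, encoder_mm, tolerance_mm=0.05):
    """Compare the counted position against an independent encoder reading."""
    return abs(axis.assumed_position() - encoder_mm) > tolerance_mm


axis = OpenLoopAxis(mm_per_step=0.01)
axis.pulse(1000)                # normal move
axis.pulse(500, blocked=True)   # motor stalls against an obstruction
```

Without the encoder reading passed to `slip_detected`, the controller has only `assumed_position()` to go on, which is exactly the situation described above.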

Numerical accuracy vs Equipment backlash

Within the numerical systems of CNC programming it is possible for the code generator to assume that the controlled mechanism is always perfectly accurate, or that accuracy tolerances are identical for all cutting or movement directions. This is not always a true condition of CNC tools.

CNC tools with a large amount of mechanical backlash can still be highly accurate if the drive or cutting mechanism is only driven so as to apply cutting force from one direction, and all driving systems are pressed tight together in that one cutting direction. However a CNC device with high backlash and a dull cutting tool can lead to cutter chatter and possible workpiece gouging. Backlash also affects accuracy of some operations involving axis movement reversals during cutting, such as the milling of a circle, where axis motion is sinusoidal. However, this can be compensated for if the amount of backlash is precisely known by linear encoders or manual measurement.
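Compensation for a known backlash can be sketched in Python as follows. This is a simplified single-axis model, assuming the backlash value is constant and that the axis was last driven in the positive direction; the function name `compensate_backlash` is hypothetical.

```python
def compensate_backlash(targets, backlash, start=0.0):
    """Return adjusted commands so the axis lands on each target.

    Assumes a known, constant backlash and that the slack was last taken
    up in the positive direction before the first move.
    """
    commands = []
    direction = 1   # last direction of travel: +1 or -1
    offset = 0.0    # extra travel currently added to each command
    position = start
    for target in targets:
        if target != position:
            new_direction = 1 if target > position else -1
            if new_direction != direction:
                # On a reversal the drive must first cross the slack.
                offset += new_direction * backlash
                direction = new_direction
        commands.append(target + offset)
        position = target
    return commands


commands = compensate_backlash([10.0, 4.0, 6.0], backlash=0.5)
```

In this model the move from 10 mm back to 4 mm is commanded as 3.5 mm, so the drive travels the extra 0.5 mm of slack before the table starts to move.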

The high backlash mechanism itself is not necessarily relied on to be repeatably accurate for the cutting process, but some other reference object or precision surface may be used to zero the mechanism, by tightly applying pressure against the reference and setting that as the zero reference for all following CNC-encoded motions. This is similar to the manual machine tool method of clamping a micrometer onto a reference beam and adjusting the vernier dial to zero using that object as the reference.

Design for manufacturability for CNC machining

Design for manufacturability (DFM) describes the process of designing or engineering a product so as to facilitate the manufacturing process and reduce its manufacturing costs. DFM allows potential problems to be fixed in the design phase, which is the least expensive place to address them. The design of the component can have an enormous effect on the cost of manufacturing. Other factors may affect manufacturability, such as the type of raw material, the form of the raw material, dimensional tolerances, and secondary processing such as finishing.

Material type

The most easily machined types of metals include aluminum, brass, magnesium, and softer metals. As materials get harder, denser and stronger, such as steel, stainless steel, titanium, and exotic alloys, they become much harder to machine and take much longer, thus being less manufacturable. Most types of plastic are easy to machine, although additions of fiberglass or carbon fiber can reduce the machinability. Plastics that are particularly soft and gummy may have machinability problems of their own.

Material form

Metals come in all forms. In the case of aluminum as an example, bar stock and plate are the two most common forms from which machined parts are made. The size and shape of the component may determine which form of material must be used. It is common for engineering drawings to specify one form over the other. Bar stock is generally close to 1/2 of the cost of plate on a per pound basis. So although the material form isn't directly related to the geometry of the component, cost can be removed at the design stage by specifying the least expensive form of the material.

Tolerances

A significant contributing factor to the cost of a machined component is the geometric tolerance to which the features must be made. The tighter the tolerance required, the more expensive the component will be to machine. When designing, specify the loosest tolerance that will serve the function of the component. Tolerances must be specified on a feature-by-feature basis. There are creative ways to engineer components with looser tolerances that still perform as well as ones with tighter tolerances.

Design and shape

As machining is a subtractive process, the time to remove the material is a major factor in determining the machining cost. The volume and shape of the material to be removed, as well as how fast the tools can be fed, will determine the machining time. When using milling cutters, the strength and stiffness of the tool, which is determined in part by its length-to-diameter ratio, will play the largest role in determining that speed. The shorter the tool is relative to its diameter, the faster it can be fed through the material. A ratio of 3:1 (L:D) or under is optimum. If that ratio cannot be achieved, a workaround such as the one depicted in the accompanying figure can be used. For holes, the length-to-diameter ratio of the tool is less critical, but should still be kept under 10:1.
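The two ratio guidelines above can be expressed as a small design check. This is a minimal Python sketch; the function names and the `operation` labels are hypothetical, and the limits are simply the 3:1 and 10:1 figures quoted in the text.

```python
def tool_ratio(length, diameter):
    """Length-to-diameter (L:D) ratio of a cutting tool."""
    if diameter <= 0:
        raise ValueError("diameter must be positive")
    return length / diameter


def ratio_acceptable(length, diameter, operation="milling"):
    """Check a tool against the guideline ratios quoted in the text."""
    ratio = tool_ratio(length, diameter)
    if operation == "milling":
        return ratio <= 3.0   # 3:1 or under is optimum
    if operation == "drilling":
        return ratio < 10.0   # holes: keep under 10:1
    raise ValueError(f"unknown operation: {operation}")
```

For example, a 30 mm long, 10 mm diameter end mill passes the milling check, while a 40 mm long cutter of the same diameter does not.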

There are many other types of features which are more or less expensive to machine. Generally chamfers cost less to machine than radii on outer horizontal edges. Undercuts are more expensive to machine. Features that require smaller tools, regardless of L:D ratio, are more expensive.

Computer-aided technologies

Computer-aided technologies (CAx) is a broad term that means the use of computer technology to aid in the design, analysis, and manufacture of products.

Advanced CAx tools merge many different aspects of the product lifecycle management (PLM), including design, finite element analysis (FEA), manufacturing, production planning, product testing with virtual lab models and visualization, product documentation, product support, etc. CAx encompasses a broad range of tools, both those commercially available and those proprietary to individual engineering firms.

The term CAD/CAM (computer-aided design and computer-aided manufacturing) is also often used in the context of a software tool that covers a number of engineering functions.

List of computer-aided technologies

- Computer-aided design (CAD)

- Computer-aided architectural design (CAAD)

- Computer-aided design and drafting (CADD)


- Computer-aided process planning (CAPP)

- Computer-aided quality assurance (CAQ)

- Computer-aided reporting (CAR)

- Computer-aided requirements capture (CAR)

- Computer-aided rule definition (CARD)

- Computer-aided rule execution (CARE)

- Computer-aided software engineering (CASE)

- Component information system (CIS)

- Computer-integrated manufacturing (CIM)

- Computer numerical control (CNC)

- Computational fluid dynamics (CFD)

- Electronic design automation (EDA)

- Enterprise resource planning (ERP)

- Finite element analysis (FEA)

- Knowledge-based engineering (KBE)

- Manufacturing process management (MPM)

- Manufacturing process planning (MPP)

- Material requirements planning (MRP)

- Manufacturing resource planning (MRP II)

- Product data management (PDM)

- Product lifecycle management (PLM)

- Computer-aided manufacturing (CAM)

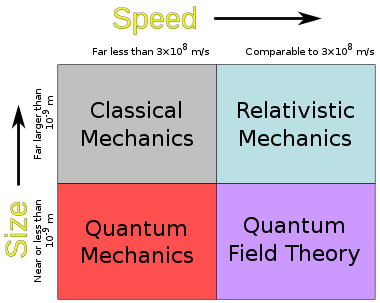

Mechatronics

Mechatronics is an engineering discipline which deals with the convergence of electrical, mechanical and manufacturing systems. Such combined systems are known as electromechanical systems and have widespread adoption. Examples include automated manufacturing systems, heating, ventilation and air-conditioning systems and various subsystems of aircraft and automobiles.

|

| Training FMS with learning robot SCORBOT-ER 4u, workbench CNC Mill and CNC Lathe |

The term mechatronics is typically used to refer to macroscopic systems but futurists have predicted the emergence of very small electromechanical devices. Already such small devices, known as Microelectromechanical systems (MEMS), are used in automobiles to tell airbags when to deploy, in digital projectors to create sharper images and in inkjet printers to create nozzles for high definition printing. In the future it is hoped the devices will help build tiny implantable medical devices and improve optical communication.

Work

Manufacturing engineering is just one facet of the engineering industry. Manufacturing engineers enjoy improving the production process from start to finish. They have the ability to keep the whole production process in mind as they zero in on a particular portion of the process. Successful students in manufacturing engineering degree programs are inspired by the notion of starting with a natural resource, such as a block of wood, and ending with a usable, valuable product, such as a desk.

Manufacturing engineers work closely with engineering and industrial design. Examples of major companies which employ manufacturing engineers in the United States include General Motors Corporation, Ford Motor Company, Chrysler, Boeing, Gates Corporation and Pfizer. Examples in Europe include Airbus, Daimler, BMW, Fiat, and Michelin Tyre.

Some industries where manufacturing engineers are generally employed:

- Aerospace industry

- Automotive industry

- Chemical industry

- Computer industry

- Electronics industry

- Food processing industry

- Garment industry

- Pharmaceutical industry

- Pulp and paper industry

- Toy industry

Frontiers of research

Flexible Manufacturing Systems

|

| A typical FMS |

A flexible manufacturing system (FMS) is a manufacturing system in which there is some amount of flexibility that allows the system to react in the case of changes, whether predicted or unpredicted. This flexibility is generally considered to fall into two categories, which both contain numerous subcategories. The first category, machine flexibility, covers the system's ability to be changed to produce new product types, and its ability to change the order of operations executed on a part. The second category, routing flexibility, consists of the ability to use multiple machines to perform the same operation on a part, as well as the system's ability to absorb large-scale changes, such as in volume, capacity, or capability. Most FMSs consist of three main systems: the work machines, which are often automated CNC machines; a material handling system that connects them to optimize parts flow; and a central control computer that controls material movements and machine flow. The main advantage of an FMS is its high flexibility in managing manufacturing resources like time and effort in order to manufacture a new product. An FMS is best applied to the production of small sets of products, similar to those that come from mass production.
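Routing flexibility, the ability to use more than one machine for the same operation, can be illustrated with a short Python sketch. The function name `route_operation`, the machine names, and the shortest-queue rule are assumptions chosen for illustration, not a description of any particular FMS controller.

```python
def route_operation(operation, capabilities, queue_lengths):
    """Pick a machine able to perform the operation, preferring the shortest queue.

    capabilities: dict mapping machine name -> set of operations it can perform
    queue_lengths: dict mapping machine name -> number of jobs waiting
    """
    candidates = [m for m, ops in capabilities.items() if operation in ops]
    if not candidates:
        raise LookupError(f"no machine can perform {operation!r}")
    # Ties are broken alphabetically so the choice is deterministic.
    return min(candidates, key=lambda m: (queue_lengths.get(m, 0), m))


# Hypothetical cell: two mills can both drill, so drilling can be rerouted.
capabilities = {
    "mill_a": {"milling", "drilling"},
    "mill_b": {"milling", "drilling"},
    "lathe_a": {"turning"},
}
queue_lengths = {"mill_a": 4, "mill_b": 1, "lathe_a": 0}
chosen = route_operation("drilling", capabilities, queue_lengths)
```

If `mill_b` were taken out of service, removing it from `capabilities` would send the same drilling job to `mill_a`, which is the kind of reaction to change that routing flexibility describes.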

Computer integrated manufacturing

Computer-integrated manufacturing (CIM) in engineering is a method of manufacturing in which the entire production process is controlled by computer. CIM joins the traditionally separate process methods through a computer. This integration allows the processes to exchange information with each other and to initiate actions. Through this integration, manufacturing can be faster and less error-prone, although the main advantage is the ability to create automated manufacturing processes. Typically CIM relies on closed-loop control processes, based on real-time input from sensors. It is also known as flexible design and manufacturing.
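The closed-loop principle mentioned above can be sketched in Python with a simple proportional feedback loop. The `SimulatedProcess` class, the gain, and the cycle count are assumptions for illustration; a real CIM installation would read physical sensors and use a controller tuned to the process.

```python
class SimulatedProcess:
    """Stand-in for a sensed process variable (e.g. a temperature or position)."""

    def __init__(self, value=0.0):
        self.value = value

    def read_sensor(self):
        return self.value

    def apply_correction(self, delta):
        self.value += delta


def run_closed_loop(process, setpoint, gain=0.5, cycles=20):
    """Proportional feedback: each cycle corrects a fraction of the measured error."""
    for _ in range(cycles):
        error = setpoint - process.read_sensor()
        process.apply_correction(gain * error)
    return process.read_sensor()


process = SimulatedProcess(0.0)
final_value = run_closed_loop(process, setpoint=100.0)
```

Each cycle measures the output, compares it with the setpoint, and feeds a correction back, so with a gain of 0.5 the remaining error in this simulation halves on every cycle.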

Friction stir welding

Friction stir welding, a new type of welding, was discovered in 1991 by The Welding Institute (TWI). This innovative solid-state (non-fusion) welding technique joins materials previously un-weldable, including several aluminum alloys. It may play an important role in the future construction of airplanes, potentially replacing rivets. Uses of this technology to date include welding the seams of the aluminum main Space Shuttle external tank, the Orion Crew Vehicle test article, the Boeing Delta II and Delta IV Expendable Launch Vehicles and the SpaceX Falcon 1 rocket; armor plating for amphibious assault ships; and welding the wings and fuselage panels of the new Eclipse 500 aircraft from Eclipse Aviation, among a growing pool of uses.

|

| Close-up view of a friction stir weld tack tool |

Other areas of research include:

Product Design, MEMS (Micro-Electro-Mechanical Systems), Lean Manufacturing, Intelligent Manufacturing Systems, Green Manufacturing, Precision Engineering, Smart Materials, etc.

Mechanics

Mechanics is the branch of physics concerned with the behavior of physical bodies when subjected to forces or displacements, and the subsequent effects of the bodies on their environment. The discipline has its roots in several ancient civilizations (see History of classical mechanics and Timeline of classical mechanics). During the early modern period, scientists such as Galileo, Kepler, and especially Newton, laid the foundation for what is now known as classical mechanics.

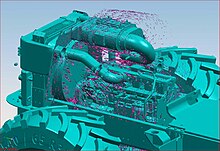

The system of study of mechanics is shown in the table below:

Classical versus quantum

The major division of the mechanics discipline separates classical mechanics from quantum mechanics.

Historically, classical mechanics came first, while quantum mechanics is a comparatively recent invention. Classical mechanics originated with Isaac Newton's Laws of motion in Principia Mathematica, while quantum mechanics didn't appear until 1900. Both are commonly held to constitute the most certain knowledge that exists about physical nature. Classical mechanics has especially often been viewed as a model for other so-called exact sciences. Essential in this respect is the relentless use of mathematics in theories, as well as the decisive role played by experiment in generating and testing them.

Quantum mechanics is of a wider scope, as it encompasses classical mechanics as a sub-discipline which applies under certain restricted circumstances. According to the correspondence principle, there is no contradiction or conflict between the two subjects; each simply pertains to specific situations. The correspondence principle states that the behavior of systems described by quantum theories reproduces classical physics in the limit of large quantum numbers. Quantum mechanics has superseded classical mechanics at the foundational level and is indispensable for the explanation and prediction of processes at the molecular and (sub)atomic level. However, for macroscopic processes classical mechanics is able to solve problems which are unmanageably difficult in quantum mechanics and hence remains useful and well used.

Modern descriptions of motion begin with a careful definition of such quantities as displacement (distance moved), time, velocity, acceleration, mass, and force. Until about 400 years ago, however, motion was explained from a very different point of view. For example, following the ideas of the Greek philosopher and scientist Aristotle, scientists reasoned that a cannonball falls down because its natural position is in the earth; the sun, the moon, and the stars travel in circles around the earth because it is the nature of heavenly objects to travel in perfect circles.

The Italian physicist and astronomer Galileo brought together the ideas of other great thinkers of his time and began to analyze motion in terms of distance traveled from some starting position and the time that it took. He showed that the speed of falling objects increases steadily during the time of their fall. This acceleration is the same for heavy objects as for light ones, provided air friction (air resistance) is discounted. The English mathematician and physicist Sir Isaac Newton improved this analysis by defining force and mass and relating these to acceleration. For objects traveling at speeds close to the speed of light, Newton’s laws were superseded by Albert Einstein’s theory of relativity. For atomic and subatomic particles, Newton’s laws were superseded by quantum theory. For everyday phenomena, however, Newton’s three laws of motion remain the cornerstone of dynamics, which is the study of what causes motion.
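Galileo's observation and Newton's second law can be checked numerically with a short sketch. The value of `G` and the function names are assumptions chosen for illustration, and air resistance is ignored throughout.

```python
G = 9.81  # assumed gravitational acceleration near Earth's surface, in m/s^2


def fall_speed(t):
    """Speed (m/s) after t seconds of free fall from rest, ignoring air resistance."""
    return G * t


def fall_distance(t):
    """Distance (m) fallen after t seconds from rest, ignoring air resistance."""
    return 0.5 * G * t ** 2


def acceleration(force, mass):
    """Newton's second law rearranged as a = F / m."""
    return force / mass


# The weight of a body is mass * G, so the acceleration comes out the same
# for a light object and a heavy one.
for mass in (0.1, 10.0):
    print(mass, acceleration(mass * G, mass))

# Speed grows steadily (linearly) with the time of fall.
for t in (1.0, 2.0, 3.0):
    print(t, fall_speed(t), fall_distance(t))
```

The mass cancels when the weight is divided by the mass, which is why heavy and light objects share the same acceleration once air resistance is discounted.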

Einsteinian versus Newtonian

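For example, in Newtonian mechanics,

F = ma

whereas in Einsteinian mechanics, which is built on the Lorentz transformations named after Hendrik Lorentz, the corresponding relation for a force acting perpendicular to the velocity is

F = γma

where γ is the Lorentz factor, γ = 1/√(1 − v²/c²), v is the speed of the body, and c is the speed of light.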
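To see how far the two descriptions diverge, the Lorentz factor γ = 1/√(1 − v²/c²) can be evaluated at a few speeds. The function names below are illustrative, and the momentum comparison is an added example rather than something stated in the text above.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def lorentz_factor(v):
    """Return gamma = 1 / sqrt(1 - v^2 / c^2) for a speed v below c."""
    if abs(v) >= C:
        raise ValueError("speed must be below the speed of light")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


def newtonian_momentum(m, v):
    """Classical momentum p = m * v."""
    return m * v


def relativistic_momentum(m, v):
    """Relativistic momentum p = gamma * m * v."""
    return lorentz_factor(v) * m * v


# At everyday speeds gamma is almost exactly 1; it grows rapidly near c.
for fraction in (0.001, 0.1, 0.6, 0.99):
    v = fraction * C
    print(fraction, lorentz_factor(v))
```

At small fractions of the speed of light γ is indistinguishable from 1, which is why Newtonian mechanics remains accurate for everyday phenomena.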

Einsteinian vs. Quantum

Relativistic corrections are also needed for quantum mechanics, although general relativity has not been integrated; the two theories remain incompatible, a hurdle which must be overcome in developing a Theory of Everything.

Antiquity

The main theory of mechanics in antiquity was Aristotelian mechanics. A later developer in this tradition was Hipparchus.

Aristotelian physics, the natural sciences, is described in the works of the Greek philosopher Aristotle (384 BC – 322 BC). In the Physics, Aristotle established general principles of change that govern all natural bodies, both living and inanimate, celestial and terrestrial, including all motion, change in respect to place, change in respect to size or number, qualitative change of any kind, and coming to be and passing away. As Martin Heidegger, one of the foremost philosophers of the twentieth century, once wrote:

Aristotelian "physics" is different from what we mean today by this word, not only to the extent that it belongs to antiquity whereas the modern physical sciences belong to modernity, rather above all it is different by virtue of the fact that Aristotle's "physics" is philosophy, whereas modern physics is a positive science that presupposes a philosophy.... This book determines the warp and woof of the whole of Western thinking, even at that place where it, as modern thinking, appears to think at odds with ancient thinking. But opposition is invariably comprised of a decisive, and often even perilous, dependence. Without Aristotle's Physics there would have been no Galileo.

To Aristotle, physics is a broad term that includes all natural sciences, such as philosophy of mind, body, sensory experience, memory and biology, and constitutes the foundational thinking underlying many of his works.

Ancient concepts

Some concepts involved in Aristotle's physics are:

- Teleology: Aristotle observes that natural things tend toward definite goals or ends insofar as they are natural. Regularities manifest a rudimentary kind of teleology.

- Natural motion: Terrestrial objects tend toward a different part of the universe according to their composition of the four elements. For example, earth, the heaviest element, tends toward the center of the universe, hence the reason for the Earth being at the center. At the opposite extreme, the lightest element, fire, tends upward, away from the center. The relative proportion of the four elements composing an object determines its motion. The elements are not proper substances in Aristotelian theory or in the modern sense of the word. Refining an arbitrarily pure sample of an element isn't possible; the elements were abstractions, and one might instead consider an arbitrarily pure sample of a terrestrial substance as having a large ratio of one element relative to the others.

- Terrestrial motion: Terrestrial objects move downward or upward toward their natural place. Motion from side to side results from the turbulent collision and sliding of the objects as well as transformations between the elements (generation and corruption).

- Rectilinear motion: Ideal terrestrial motion would proceed straight up or straight down at constant speed. Celestial motion is always ideal: it is circular and its speed is constant.

- Speed, weight and resistance: The ideal speed of a terrestrial object is directly proportional to its weight. In nature, however, the matter obstructing an object's path is a limiting factor: the speed is inversely proportional to the viscosity of the medium.

- Vacuum isn't possible: Vacuum doesn't occur, but hypothetically, terrestrial motion in a vacuum would be indefinitely fast.

- Continuum: Aristotle argues against the indivisibles of Democritus (which differ considerably from the historical and the modern use of the term atom).

- Aether: The "greater and lesser lights of heaven" (the sun, moon, planets and stars) are embedded in perfectly concentric crystal spheres that rotate eternally at fixed rates. Because the spheres never change and (meteorites notwithstanding) don't fall down or rise up from the ground, they cannot be composed of the four terrestrial elements. Much as Homer's æthere (αἰθήρ), the "pure air" of Mount Olympus, was the divine counterpart of the air (ἀήρ, aer) breathed by mortals, the celestial spheres are composed of a special element, eternal and unchanging, with circular natural motion.

- Terrestrial change: Unlike the eternal and unchanging celestial aether, each of the four terrestrial elements is capable of changing into either of the two elements it shares a property with: e.g. the cold and wet (water) can transform into the hot and wet (air) or the cold and dry (earth), and any apparent change into the hot and dry (fire) is actually a two-step process. These properties are predicated of an actual substance relative to the work it's able to do: that of heating or chilling and of desiccating or moistening. The four elements exist only with regard to this capacity and relative to some potential work. The celestial element is eternal and unchanging, so only the four terrestrial elements account for coming to be and passing away, also called "generation and corruption" after the Latin title of Aristotle's De Generatione et Corruptione (Περὶ γενέσεως καὶ φθορᾶς).

- Celestial motion: The crystal spheres carrying the sun, moon and stars move eternally with unchanging circular motion. They're composed of solid aether and no gaps exist between the spheres. Spheres are embedded within spheres to account for the wandering stars (i.e. the modern planets, which appear to move erratically in comparison to the sun, moon and stars). Later, the belief that all spheres are concentric was forsaken in favor of Ptolemy's deferent and epicycle. Aristotle submits to the calculations of astronomers regarding the total number of spheres, and various accounts give a number in the neighborhood of 50 spheres. An unmoved mover is assumed for each sphere, including a prime mover for the sphere of fixed stars. The unmoved movers do not push the spheres (nor could they, being insubstantial and dimensionless); rather, they're the final cause of the motion, meaning they explain it in a way that's similar to the explanation "the soul is moved by beauty". They simply "think about thinking", eternally without change, which is the idea of "being qua being" in Aristotle's reformulation of Plato's theory.

Elements

Aristotle taught that the elements which compose the Earth are different from the one that composes the heavens. He believed that four elements make up everything under the moon (the terrestrial): earth, air, fire and water. He also held that the heavens are made of a special, fifth element called "aether", which is weightless and "incorruptible" (which is to say, it doesn't change). Aether is also known by the name "quintessence", literally "fifth substance".

He considered heavy substances such as iron and other metals to consist primarily of the element earth, with a smaller amount of the other three terrestrial elements. Other, lighter objects, he believed, have less earth relative to the other three elements in their composition.

Motion

Aristotle held that each of the four terrestrial (or worldly) elements moves toward its natural place, and that this natural motion would proceed unless hindered. For instance, because smoke is mainly air, it rises toward the sky but not as high as fire. He also taught that objects move against their natural motion only when forced (i.e. pushed) in a different direction and only while that force is being applied. This idea had flaws that were apparent to Aristotle and his contemporaries. It was questionable, for example, how an arrow would continue to fly forward after leaving the bowstring, which could no longer be forcing it forward. In response, Aristotle suggested the air behind an arrow in flight is thinned and the surrounding air, rushing in to fill that potential vacuum, is what pushes it forward. This was consistent with his explanation of a medium, such as air or water, causing resistance to the motion of an object passing through it. The turbulent motion of air around an arrow in flight is very complicated, and still not fully understood.

A vacuum, or void, is a place free of everything, and Aristotle argued against the possibility. Aristotle believed that the speed of an object's motion is proportional to the force being applied (or the object's weight in the case of natural motion) and inversely proportional to the viscosity of the medium; the more tenuous a medium is, the faster the motion. He reasoned that objects moving in a void could move indefinitely fast and thus the objects surrounding a void would immediately fill it before it could actually form.
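The rule of motion attributed to Aristotle here can be written as a simple proportionality, which makes the argument against the void easy to see. The function name, the constant `k`, and the numeric values are assumptions for illustration only; this models the historical rule, not actual physics.

```python
def aristotelian_speed(force, resistance, k=1.0):
    """Speed proportional to the force and inversely proportional to the medium's resistance."""
    if resistance <= 0:
        # With no resisting medium the rule gives no finite speed,
        # which mirrors the argument that a void cannot exist.
        raise ValueError("the rule gives no finite speed in a void")
    return k * force / resistance


# The more tenuous the medium, the faster the motion under this rule.
for resistance in (10.0, 1.0, 0.1, 0.001):
    print(resistance, aristotelian_speed(force=1.0, resistance=resistance))
```

As the resistance shrinks toward zero the computed speed grows without bound, which is the step that leads to the conclusion that motion in a void would be indefinitely fast.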

Natural place

The Aristotelian explanation of gravity is that all bodies move toward their natural place. For the element earth, that place is the center of the (geocentric) universe. Next comes the natural place of water (in a concentric shell around that of earth). The natural place of air is likewise a concentric shell surrounding the place of water. Sea level is between those two. Finally, the natural place of fire is higher than that of air but below the innermost celestial sphere (the one carrying the Moon). Even at locations well above sea level, such as a mountain top, an object made mostly of earth and water tends to fall and objects made mostly of air and fire tend to rise.

Medieval commentary

The theory of impetus was an auxiliary or secondary theory of Aristotelian dynamics, put forth initially to explain projectile motion against gravity. It was developed by Jean Buridan (14th century) and became an ancestor to the concepts of inertia, momentum and acceleration in classical mechanics.

The problem of projectile motion in Aristotelian dynamics