Mechanical engineering (Indonesian: Teknik Mesin or Teknik Mekanika) is a branch of engineering in a very broad sense: it studies the application of the basic principles of physics to the analysis, design, manufacture, and maintenance of mechanical systems. The discipline requires a deep understanding of the core concepts of mechanics, kinematics, thermodynamics, and energy. A specialist in the field is called a mechanical engineer, who applies this knowledge to design and analyze the manufacture of vehicles, aircraft, industrial plants, industrial equipment and machinery, and other fields that have grown out of mechanical engineering. Mechanical engineering comprises:

- Energy Conversion

- Mechanical Design

- Materials Science and Engineering

- Mechanical Production Engineering

Mechanical engineering is a discipline of engineering that applies the principles of physics and materials science for analysis, design, manufacturing, and maintenance of mechanical systems. It is the branch of engineering that involves the production and usage of heat and mechanical power for the design, production, and operation of machines and tools. It is one of the oldest and broadest engineering disciplines.

The engineering field requires an understanding of core concepts including mechanics, kinematics, thermodynamics, materials science, and structural analysis. Mechanical engineers use these core principles along with tools like computer-aided engineering and product lifecycle management to design and analyze manufacturing plants, industrial equipment and machinery, heating and cooling systems, transport systems, aircraft, watercraft, robotics, medical devices and more.

Mechanical engineering emerged as a field during the industrial revolution in Europe in the 18th century; however, its development can be traced back several thousand years around the world. Mechanical engineering science emerged in the 19th century as a result of developments in the field of physics. The field has continually evolved to incorporate advancements in technology, and mechanical engineers today are pursuing developments in such fields as composites, mechatronics, and nanotechnology. Mechanical engineering overlaps with aerospace engineering, civil engineering, electrical engineering, petroleum engineering, and chemical engineering to varying degrees.

Development

Applications of mechanical engineering are found in the records of many ancient and medieval societies throughout the globe. In ancient Greece, the works of Archimedes (287 BC–212 BC) deeply influenced mechanics in the Western tradition, and Heron of Alexandria (c. 10–70 AD) created the first steam engine. In China, Zhang Heng (78–139 AD) improved a water clock and invented a seismometer, and Ma Jun (200–265 AD) invented a chariot with differential gears. The medieval Chinese horologist and engineer Su Song (1020–1101 AD) incorporated an escapement mechanism into his astronomical clock tower two centuries before any escapement can be found in clocks of medieval Europe; the tower also featured the world's first known endless power-transmitting chain drive.

From the 7th to the 15th century, the era known as the Islamic Golden Age, Muslim inventors made remarkable contributions to mechanical technology. One of them, Al-Jazari, wrote his famous Book of Knowledge of Ingenious Mechanical Devices in 1206 and presented many mechanical designs. He is also considered the inventor of mechanical devices that now form the basis of many mechanisms, such as the crankshaft and camshaft.

Important breakthroughs in the foundations of mechanical engineering occurred in England during the 17th century, when Sir Isaac Newton formulated his three laws of motion and developed calculus. Newton was reluctant to publish his methods and laws for years, but he was finally persuaded to do so by colleagues such as Edmond Halley.

During the early 19th century in England, Germany and Scotland, the development of machine tools led mechanical engineering to develop as a separate field within engineering, providing manufacturing machines and the engines to power them. The first British professional society of mechanical engineers, the Institution of Mechanical Engineers, was formed in 1847, thirty years after the civil engineers formed the first such professional society, the Institution of Civil Engineers. On the European continent, Johann von Zimmermann (1820–1901) founded the first factory for grinding machines in Chemnitz, Germany, in 1848.

In the United States, the American Society of Mechanical Engineers (ASME) was formed in 1880, becoming the third such professional engineering society, after the American Society of Civil Engineers (1852) and the American Institute of Mining Engineers (1871). The first schools in the United States to offer an engineering education were the United States Military Academy in 1817, an institution now known as Norwich University in 1819, and Rensselaer Polytechnic Institute in 1825. Education in mechanical engineering has historically been based on a strong foundation in mathematics and science.

Education

Degrees in mechanical engineering are offered at universities worldwide. In Brazil, Ireland, China, Greece, Turkey, North America, South Asia, India and the United Kingdom, mechanical engineering programs typically take four to five years of study and result in a Bachelor of Science (B.Sc), Bachelor of Science Engineering (B.ScEng), Bachelor of Engineering (B.Eng), Bachelor of Technology (B.Tech), or Bachelor of Applied Science (B.A.Sc) degree, in or with emphasis in mechanical engineering. In Spain, Portugal and most of South America, where neither BSc nor BTech programs have been adopted, the formal name for the degree is "Mechanical Engineer", and the course work is based on five or six years of training. In Italy the course work is based on five years of training, but in order to qualify as an Engineer you have to pass a state exam at the end of the course.

In Australia, mechanical engineering degrees are awarded as Bachelor of Engineering (Mechanical). The degree takes four years of full-time study to complete. To ensure quality in engineering degrees, the Australian Institution of Engineers accredits engineering degrees awarded by Australian universities. Before the degree can be awarded, the student must complete at least three months of on-the-job work experience in an engineering firm.

In the United States, most undergraduate mechanical engineering programs are accredited by the Accreditation Board for Engineering and Technology (ABET) to ensure similar course requirements and standards among universities. The ABET web site lists 276 accredited mechanical engineering programs as of June 19, 2006. Mechanical engineering programs in Canada are accredited by the Canadian Engineering Accreditation Board (CEAB), and most other countries offering engineering degrees have similar accreditation societies.

Some mechanical engineers go on to pursue a postgraduate degree such as a Master of Engineering (M.Eng.), Master of Technology, Master of Science (M.Sc.), Master of Engineering Management (M.E.M), a Doctor of Philosophy in engineering (Eng.D., Ph.D) or an engineer's degree. The master's and engineer's degrees may or may not include research. The Doctor of Philosophy includes a significant research component and is often viewed as the entry point to academia. The Engineer's degree exists at a few institutions at an intermediate level between the master's degree and the doctorate.

Coursework

Standards set by each country's accreditation society are intended to provide uniformity in fundamental subject material, promote competence among graduating engineers, and maintain confidence in the engineering profession as a whole. Engineering programs in the U.S., for example, are required by ABET to show that their students can "work professionally in both thermal and mechanical systems areas." The specific courses required to graduate, however, may differ from program to program. Universities and institutes of technology will often combine multiple subjects into a single class or split a subject into multiple classes, depending on the faculty available and the university's major area(s) of research.

The fundamental subjects of mechanical engineering usually include:

- Statics and Dynamics

- Strength of materials and Solid mechanics

- Instrumentation and Measurement

- Electrotechnology

- Electronics

- Thermodynamics, Heat transfer, Energy conversion, and HVAC (Heating, Ventilation, and Air Conditioning)

- Combustion, Automotive engines, Fuels

- Fluid mechanics and Fluid dynamics

- Mechanism design (including kinematics and dynamics)

- Manufacturing engineering, technology, or processes

- Hydraulics and pneumatics

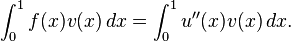

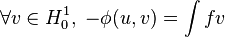

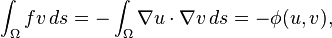

- Mathematics - in particular, calculus, differential equations, and linear algebra.

- Engineering design

- Product design

- Mechatronics and control theory

- Material Engineering

- Design engineering, Drafting, computer-aided design (CAD) (including solid modeling), and computer-aided manufacturing (CAM)

Mechanical engineers are also expected to understand and be able to apply basic concepts from chemistry, physics, chemical engineering, civil engineering, and electrical engineering. Most mechanical engineering programs include multiple semesters of calculus, as well as advanced mathematical concepts including differential equations, partial differential equations, linear algebra, abstract algebra, and differential geometry, among others.

In addition to the core mechanical engineering curriculum, many mechanical engineering programs offer more specialized programs and classes, such as robotics, transport and logistics, cryogenics, fuel technology, automotive engineering, biomechanics, vibration, optics and others, if a separate department does not exist for these subjects.

Most mechanical engineering programs also require varying amounts of research or community projects to gain practical problem-solving experience. In the United States it is common for mechanical engineering students to complete one or more internships while studying, though this is not typically mandated by the university. Cooperative education is another option.

License

Engineers may seek licensure from a state, provincial, or national government. The purpose of this process is to ensure that engineers possess the necessary technical knowledge, real-world experience, and knowledge of the local legal system to practice engineering at a professional level. Once certified, the engineer is given the title of Professional Engineer (in the United States, Canada, Japan, South Korea, Bangladesh and South Africa), Chartered Engineer (in the United Kingdom, Ireland, India and Zimbabwe), Chartered Professional Engineer (in Australia and New Zealand) or European Engineer (in much of the European Union). Not all mechanical engineers choose to become licensed; those who do can be distinguished as Chartered or Professional Engineers by the post-nominal title P.E., P.Eng., or C.Eng., as in: Mike Thompson, P.Eng.

In the U.S., to become a licensed Professional Engineer, an engineer must pass the comprehensive FE (Fundamentals of Engineering) exam, work a given number of years as an Engineering Intern (EI) or Engineer-in-Training (EIT), and finally pass the "Principles and Practice" or PE (Practicing Engineer or Professional Engineer) exams.

In the United States, the requirements and steps of this process are set forth by the National Council of Examiners for Engineering and Surveying (NCEES), a national non-profit representing all states. In the UK, current graduates require a B.Eng. plus an appropriate master's degree or an integrated M.Eng. degree, a minimum of four years of postgraduate on-the-job competency development, and a peer-reviewed project report in the candidate's specialty area in order to become chartered through the Institution of Mechanical Engineers.

In most modern countries, certain engineering tasks, such as the design of bridges, electric power plants, and chemical plants, must be approved by a Professional Engineer or a Chartered Engineer. "Only a licensed engineer, for instance, may prepare, sign, seal and submit engineering plans and drawings to a public authority for approval, or to seal engineering work for public and private clients." This requirement can be written into state and provincial legislation, as in the Canadian provinces (for example, the Engineer Act of Ontario or Quebec).

In other countries, such as Australia, no such legislation exists; however, practically all certifying bodies maintain a code of ethics independent of legislation that they expect all members to abide by or risk expulsion.

Salaries and workforce statistics

The total number of engineers employed in the U.S. in 2009 was roughly 1.6 million. Of these, 239,000 were mechanical engineers (14.9%), the second largest discipline by size behind civil (278,000). The total number of mechanical engineering jobs in 2009 was projected to grow 6% over the next decade, with average starting salaries being $58,800 with a bachelor's degree. The median annual income of mechanical engineers in the U.S. workforce was roughly $74,900. This number was highest when working for the government ($86,250), and lowest in education ($63,050).

In 2007, Canadian engineers made an average of CAD$29.83 per hour with 4% unemployed. The average for all occupations was $18.07 per hour with 7% unemployed. Twelve percent of these engineers were self-employed, and since 1997 the proportion of female engineers had risen to 6%.

Modern tools

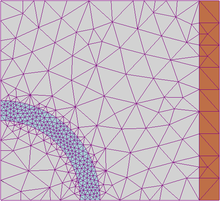

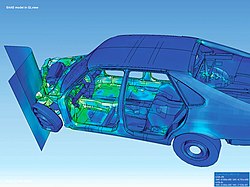

Many mechanical engineering companies, especially those in industrialized nations, have begun to incorporate Computer-Aided Engineering (CAE) programs into their existing design and analysis processes, including 2D and 3D Solid Modeling computer-aided design (CAD). This method has many benefits, including easier and more exhaustive visualization of products, the ability to create virtual assemblies of parts, and the ease of use in designing mating interfaces and tolerances.

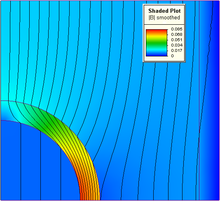

Other CAE programs commonly used by mechanical engineers include product lifecycle management (PLM) tools and analysis tools used to perform complex simulations. Analysis tools may be used to predict product response to expected loads, including fatigue life and manufacturability. These tools include finite element analysis (FEA), computational fluid dynamics (CFD), and Computer-Aided Manufacturing (CAM).

Using CAE programs, a mechanical design team can quickly and cheaply iterate the design process to develop a product that better meets cost, performance, and other constraints. No physical prototype need be created until the design nears completion, allowing hundreds or thousands of designs to be evaluated, instead of a relative few. In addition, CAE analysis programs can model complicated physical phenomena which cannot be solved by hand, such as viscoelasticity, complex contact between mating parts, or non-Newtonian flows.

As mechanical engineering begins to merge with other disciplines, as seen in mechatronics, Multidisciplinary Design Optimization (MDO) is being used with other CAE programs to automate and improve the iterative design process. MDO tools wrap around existing CAE processes, allowing product evaluation to continue even after the analyst goes home for the day. They also utilize sophisticated optimization algorithms to more intelligently explore possible designs, often finding better, innovative solutions to difficult multidisciplinary design problems.
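The iterate-and-evaluate loop described above can be sketched in a few lines. The example below is only an illustration under stated assumptions: a brute-force grid search stands in for the far more sophisticated optimization algorithms of real MDO tools, the "analysis" is the textbook bending-stress formula for an end-loaded rectangular cantilever rather than a CAE solver, and every name and number (`lightest_feasible`, the load, the candidate dimensions, the allowable stress) is hypothetical.

```python
from itertools import product


def bending_stress_pa(force_n, length_m, width_m, height_m):
    """Peak bending stress at the root of an end-loaded rectangular cantilever.

    sigma = M*c/I with M = F*L, c = h/2, I = b*h**3/12, i.e. 6*F*L/(b*h**2).
    """
    return 6.0 * force_n * length_m / (width_m * height_m ** 2)


def mass_kg(density_kg_m3, length_m, width_m, height_m):
    """Mass of a solid rectangular bar."""
    return density_kg_m3 * length_m * width_m * height_m


def lightest_feasible(force_n, length_m, density_kg_m3, allowable_pa, widths, heights):
    """Evaluate every (width, height) pair and keep the lightest one that
    stays at or below the allowable stress. Returns (mass, width, height),
    or None if no candidate is feasible."""
    best = None
    for b, h in product(widths, heights):
        if bending_stress_pa(force_n, length_m, b, h) > allowable_pa:
            continue  # this candidate is overstressed, discard it
        m = mass_kg(density_kg_m3, length_m, b, h)
        if best is None or m < best[0]:
            best = (m, b, h)
    return best


if __name__ == "__main__":
    # Hypothetical case: 1 kN end load, 1 m steel bar, 100 MPa allowable stress.
    result = lightest_feasible(
        force_n=1000.0, length_m=1.0, density_kg_m3=7850.0, allowable_pa=100e6,
        widths=[0.02, 0.04], heights=[0.04, 0.06, 0.08],
    )
    print(result)
```

The point of the sketch is the structure, not the numbers: each candidate design is analyzed automatically, infeasible ones are discarded, and the best survivor is reported without any physical prototype.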

Subdisciplines

The field of mechanical engineering can be thought of as a collection of many mechanical engineering science disciplines. Several of these subdisciplines which are typically taught at the undergraduate level are listed below, with a brief explanation and the most common application of each. Some of these subdisciplines are unique to mechanical engineering, while others are a combination of mechanical engineering and one or more other disciplines. Most work that a mechanical engineer does uses skills and techniques from several of these subdisciplines, as well as specialized subdisciplines. Specialized subdisciplines, as used in this article, are more likely to be the subject of graduate studies or on-the-job training than undergraduate research. Several specialized subdisciplines are discussed in this section.

Mechanics

Mechanics is, in the most general sense, the study of forces and their effect upon matter. Typically, engineering mechanics is used to analyze and predict the acceleration and deformation (both elastic and plastic) of objects under known forces (also called loads) or stresses. Subdisciplines of mechanics include:

- Statics, the study of how forces affect non-moving bodies under known loads

- Dynamics (or kinetics), the study of how forces affect moving bodies

- Mechanics of materials, the study of how different materials deform under various types of stress

- Fluid mechanics, the study of how fluids react to forces

- Continuum mechanics, a method of applying mechanics that assumes that objects are continuous (rather than discrete)

Mechanical engineers typically use mechanics in the design or analysis phases of engineering. If the engineering project were the design of a vehicle, statics might be employed to design the frame of the vehicle, in order to evaluate where the stresses will be most intense. Dynamics might be used when designing the car's engine, to evaluate the forces in the pistons and cams as the engine cycles. Mechanics of materials might be used to choose appropriate materials for the frame and engine. Fluid mechanics might be used to design a ventilation system for the vehicle (see HVAC), or to design the intake system for the engine.
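As a small illustration of the statics step, the sketch below computes the support reactions of a simply supported beam carrying one point load, using only force and moment balance. Treating a vehicle frame rail as such a beam is a deliberate simplification, and the function name and the numbers are hypothetical.

```python
def beam_reactions(span_m: float, load_n: float, load_pos_m: float) -> tuple[float, float]:
    """Return (R_left, R_right) in newtons for a simply supported beam
    with a single downward point load at load_pos_m from the left support."""
    if span_m <= 0.0:
        raise ValueError("span must be positive")
    if not 0.0 <= load_pos_m <= span_m:
        raise ValueError("load must sit between the supports")
    # Moment balance about the left support gives the right reaction.
    r_right = load_n * load_pos_m / span_m
    # Vertical force balance gives the left reaction.
    r_left = load_n - r_right
    return r_left, r_right


if __name__ == "__main__":
    # Hypothetical rail: 2.5 m between supports, 6 kN load 1.0 m from the left.
    left, right = beam_reactions(2.5, 6000.0, 1.0)
    print(f"R_left = {left:.0f} N, R_right = {right:.0f} N")
```

The support closer to the load carries the larger share, which is the kind of result an engineer would use to locate the most heavily stressed region.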

Kinematics

Kinematics is the study of the motion of bodies (objects) and systems (groups of objects), while ignoring the forces that cause the motion. The movement of a crane and the oscillations of a piston in an engine are both simple kinematic systems. The crane is a type of open kinematic chain, while the piston is part of a closed four-bar linkage.

Mechanical engineers typically use kinematics in the design and analysis of mechanisms. Kinematics can be used to find the possible range of motion for a given mechanism, or, working in reverse, can be used to design a mechanism that has a desired range of motion.
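The piston example can be made concrete with the position equation of an in-line slider-crank, the crank, connecting rod and piston arrangement found in most engines. The sketch below is purely geometric (no forces appear, as kinematics requires) and assumes zero cylinder offset; the function names and dimensions are hypothetical.

```python
import math


def piston_position(crank_radius: float, rod_length: float, crank_angle_rad: float) -> float:
    """Distance from the crank axis to the piston pin, measured along the
    cylinder axis, for an in-line slider-crank (no cylinder offset)."""
    if rod_length <= crank_radius:
        raise ValueError("connecting rod must be longer than the crank radius")
    r, l, theta = crank_radius, rod_length, crank_angle_rad
    return r * math.cos(theta) + math.sqrt(l ** 2 - (r * math.sin(theta)) ** 2)


def stroke(crank_radius: float, rod_length: float) -> float:
    """Range of piston motion: position at top dead centre minus bottom dead centre."""
    top = piston_position(crank_radius, rod_length, 0.0)
    bottom = piston_position(crank_radius, rod_length, math.pi)
    return top - bottom


if __name__ == "__main__":
    # Hypothetical engine: 40 mm crank radius, 150 mm connecting rod.
    for deg in (0, 90, 180, 270):
        x = piston_position(0.040, 0.150, math.radians(deg))
        print(f"{deg:3d} deg -> {x * 1000:.1f} mm")
    print(f"stroke = {stroke(0.040, 0.150) * 1000:.1f} mm")
```

The stroke comes out as twice the crank radius regardless of rod length, which is the "possible range of motion" of this mechanism.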

Mechatronics and robotics

|

| Training FMS with learning robot SCORBOT-ER 4u,workbench CNC Mill & CNC Lathe |

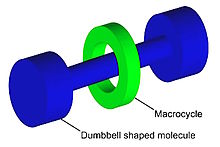

Mechatronics is an interdisciplinary branch of mechanical engineering, electrical engineering and software engineering that is concerned with integrating electrical and mechanical engineering to create hybrid systems. In this way, machines can be automated through the use of electric motors, servo-mechanisms, and other electrical systems in conjunction with special software. A common example of a mechatronics system is a CD-ROM drive. Mechanical systems open and close the drive, spin the CD and move the laser, while an optical system reads the data on the CD and converts it to bits. Integrated software controls the process and communicates the contents of the CD to the computer.

Robotics is the application of mechatronics to create robots, which are often used in industry to perform tasks that are dangerous, unpleasant, or repetitive. These robots may be of any shape and size, but all are preprogrammed and interact physically with the world. To create a robot, an engineer typically employs kinematics (to determine the robot's range of motion) and mechanics (to determine the stresses within the robot).
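To show how kinematics yields a robot's range of motion, the sketch below gives the forward kinematics of a planar arm with two revolute joints. It is an idealized model, assuming rigid links and joints that can rotate without limits; the function names and link lengths are hypothetical and not taken from any particular robot.

```python
import math


def forward_kinematics(l1: float, l2: float, theta1: float, theta2: float) -> tuple[float, float]:
    """End-effector (x, y) of a planar two-link arm.

    theta1 is the shoulder angle from the x-axis; theta2 is the elbow angle
    measured relative to the first link. Angles are in radians.
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def reach_limits(l1: float, l2: float) -> tuple[float, float]:
    """Inner and outer radius of the reachable annulus, assuming both
    joints can rotate freely (an assumption; real joints have stops)."""
    return abs(l1 - l2), l1 + l2


if __name__ == "__main__":
    # Hypothetical arm with 0.30 m and 0.20 m links.
    print(forward_kinematics(0.30, 0.20, math.radians(30), math.radians(45)))
    print(reach_limits(0.30, 0.20))
```

Mechanics would then take over from here, using the link positions to compute the loads and stresses in each member.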

Robots are used extensively in industrial engineering. They allow businesses to save money on labor, to perform tasks that are either too dangerous or too precise for humans to perform economically, and to ensure better quality. Many companies employ assembly lines of robots, especially in the automotive industry, and some factories are so robotized that they can run by themselves. Outside the factory, robots have been employed in bomb disposal, space exploration, and many other fields. Robots are also sold for various residential applications.

Structural analysis

Structural analysis is the branch of mechanical engineering (and also civil engineering) devoted to examining why and how objects fail and to fixing the objects and improving their performance. Structural failures occur in two general modes: static failure and fatigue failure. Static structural failure occurs when, upon being loaded (having a force applied), the object being analyzed either breaks or is deformed plastically, depending on the criterion for failure. Fatigue failure occurs when an object fails after a number of repeated loading and unloading cycles. Fatigue failure occurs because of imperfections in the object: a microscopic crack on the surface of the object, for instance, will grow slightly with each cycle (propagation) until the crack is large enough to cause ultimate failure.
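A static failure check can be sketched for the simplest possible case: a bar in uniaxial tension, with the onset of plastic deformation (stress reaching the yield strength) taken as the failure criterion. That choice of criterion, the function names, and the material values are assumptions made for illustration; real parts usually need multiaxial criteria and stress concentrations taken into account, and fatigue is not covered here.

```python
def axial_stress_pa(force_n: float, area_m2: float) -> float:
    """Average normal stress in a bar under an axial load."""
    if area_m2 <= 0.0:
        raise ValueError("cross-sectional area must be positive")
    return force_n / area_m2


def safety_factor(force_n: float, area_m2: float, yield_strength_pa: float) -> float:
    """Ratio of yield strength to applied stress; below 1 means yielding."""
    return yield_strength_pa / axial_stress_pa(force_n, area_m2)


def fails_statically(force_n: float, area_m2: float, yield_strength_pa: float) -> bool:
    """True if the applied stress reaches the yield strength."""
    return axial_stress_pa(force_n, area_m2) >= yield_strength_pa


if __name__ == "__main__":
    # Hypothetical tie rod: 50 kN on a 200 mm^2 section, 350 MPa yield strength.
    force, area, yield_strength = 50e3, 200e-6, 350e6
    print(f"stress = {axial_stress_pa(force, area) / 1e6:.0f} MPa")
    print(f"safety factor = {safety_factor(force, area, yield_strength):.2f}")
    print(f"fails = {fails_statically(force, area, yield_strength)}")
```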

Failure is not simply defined as when a part breaks, however; it is defined as when a part does not operate as intended. Some systems, such as the perforated top sections of some plastic bags, are designed to break. If these systems do not break, failure analysis might be employed to determine the cause.

Structural analysis is often used by mechanical engineers after a failure has occurred, or when designing to prevent failure. Engineers often use online documents and books such as those published by ASM to aid them in determining the type of failure and possible causes.

Structural analysis may be used in the office when designing parts, in the field to analyze failed parts, or in laboratories where parts might undergo controlled failure tests.

Thermodynamics and thermo-science

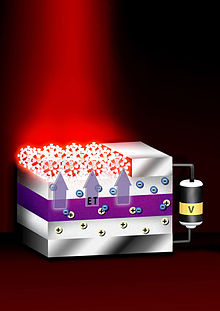

Thermodynamics is an applied science used in several branches of engineering, including mechanical and chemical engineering. At its simplest, thermodynamics is the study of energy, its use and transformation through a system. Typically, engineering thermodynamics is concerned with changing energy from one form to another. As an example, automotive engines convert chemical energy (enthalpy) from the fuel into heat, and then into mechanical work that eventually turns the wheels.

Thermodynamics principles are used by mechanical engineers in the fields of heat transfer, thermofluids, and energy conversion. Mechanical engineers use thermo-science to design engines and power plants, heating, ventilation, and air-conditioning (HVAC) systems, heat exchangers, heat sinks, radiators, refrigeration, insulation, and others.
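The idea of converting heat into work can be quantified with two standard relations: the thermal efficiency of a heat engine (work out divided by heat in) and the Carnot limit, which bounds the efficiency of any heat engine operating between two temperatures. The sketch below is a minimal illustration; the function names and the numbers are hypothetical and do not describe a specific engine.

```python
def thermal_efficiency(work_out_j: float, heat_in_j: float) -> float:
    """Fraction of the supplied heat that is converted into work."""
    if heat_in_j <= 0.0:
        raise ValueError("heat input must be positive")
    return work_out_j / heat_in_j


def carnot_limit(t_hot_k: float, t_cold_k: float) -> float:
    """Upper bound on heat-engine efficiency between two reservoirs.

    Temperatures must be absolute (kelvin)."""
    if not t_hot_k > t_cold_k > 0.0:
        raise ValueError("require t_hot_k > t_cold_k > 0")
    return 1.0 - t_cold_k / t_hot_k


if __name__ == "__main__":
    # Hypothetical engine cycle: 1000 J of heat released by the fuel, 300 J of shaft work.
    eta = thermal_efficiency(300.0, 1000.0)
    # Hypothetical reservoir temperatures of 600 K and 300 K.
    bound = carnot_limit(600.0, 300.0)
    print(f"efficiency = {eta:.2f}, Carnot bound = {bound:.2f}")
```

Comparing an actual efficiency with the Carnot bound shows how much of the shortfall is unavoidable and how much is open to design improvement.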

Design and Drafting

Drafting or technical drawing is the means by which mechanical engineers design products and create instructions for manufacturing parts. A technical drawing can be a computer model or hand-drawn schematic showing all the dimensions necessary to manufacture a part, as well as assembly notes, a list of required materials, and other pertinent information. A U.S. mechanical engineer or skilled worker who creates technical drawings may be referred to as a drafter or draftsman. Drafting has historically been a two-dimensional process, but Computer-Aided Design (CAD) programs now allow the designer to create in three dimensions.

Instructions for manufacturing a part must be fed to the necessary machinery, either manually, through programmed instructions, or through the use of a Computer-Aided Manufacturing (CAM) or combined CAD/CAM program. Optionally, an engineer may also manually manufacture a part using the technical drawings, but this is becoming an increasing rarity, with the advent of Computer Numerically Controlled (CNC) manufacturing. Engineers primarily manually manufacture parts in the areas of applied spray coatings, finishes, and other processes that cannot economically or practically be done by a machine.

Drafting is used in nearly every subdiscipline of mechanical engineering, and by many other branches of engineering and architecture. Three-dimensional models created using CAD software are also commonly used in Finite Element Analysis (FEA) and Computational Fluid Dynamics (CFD).

Frontiers of research

Mechanical engineers are constantly pushing the boundaries of what is physically possible in order to produce safer, cheaper, and more efficient machines and mechanical systems. Some technologies at the cutting edge of mechanical engineering are listed below (see also exploratory engineering).

Micro electro-mechanical systems (MEMS)

Micron-scale mechanical components such as springs, gears, and fluidic and heat transfer devices are fabricated from a variety of substrate materials such as silicon, glass and polymers like SU8. Examples of MEMS components include the accelerometers used as car airbag sensors and in modern cell phones, gyroscopes for precise positioning, and microfluidic devices used in biomedical applications.

Friction stir welding (FSW)

Friction stir welding, a new type of welding, was invented in 1991 by The Welding Institute (TWI). This innovative steady-state (non-fusion) welding technique joins materials previously un-weldable, including several aluminum alloys. It may play an important role in the future construction of airplanes, potentially replacing rivets. Uses of this technology to date include welding the seams of the aluminum main Space Shuttle external tank, the Orion Crew Vehicle test article, the Boeing Delta II and Delta IV Expendable Launch Vehicles and the SpaceX Falcon 1 rocket; armor plating for amphibious assault ships; and welding the wings and fuselage panels of the new Eclipse 500 aircraft from Eclipse Aviation, among a growing pool of uses.

Friction-stir welding (FSW) is a solid-state joining process (meaning the metal is not melted during the process) and is used for applications where the original metal characteristics must remain unchanged as far as possible. This process is primarily used on aluminium, and most often on large pieces which cannot be easily heat treated post weld to recover temper characteristics.

It was invented and experimentally proven by Wayne Thomas and a team of his colleagues at The Welding Institute UK in December 1991. TWI holds a number of patents on the process, the first being the most descriptive.


Principle of operation

Schematic diagram of the FSW process: (A) Two discrete metal workpieces butted together, along with the tool (with a probe).

In FSW, a cylindrical-shouldered tool, with a profiled threaded/unthreaded probe (nib or pin) is rotated at a constant speed and fed at a constant traverse rate into the joint line between two pieces of sheet or plate material, which are butted together. The parts have to be clamped rigidly onto a backing bar in a manner that prevents the abutting joint faces from being forced apart. The length of the nib is slightly less than the weld depth required and the tool shoulder should be in intimate contact with the work surface. The nib is then moved against the work, or vice versa.

Frictional heat is generated between the wear-resistant welding tool shoulder and nib and the material of the workpieces. This heat, along with the heat generated by the mechanical mixing process and the adiabatic heat within the material, causes the stirred materials to soften without reaching the melting point (hence the description as a solid-state process), allowing the tool to traverse along the weld line in a plasticised tubular shaft of metal. As the pin is moved in the direction of welding, the leading face of the pin, assisted by a special pin profile, forces plasticised material to the back of the pin while applying a substantial forging force to consolidate the weld metal. The welding of the material is facilitated by severe plastic deformation in the solid state, involving dynamic recrystallization of the base material.

Microstructural features

(B) The progress of the tool through the joint, also showing the weld zone and the region affected by the tool shoulder.

The solid-state nature of the FSW process, combined with its unusual tool and asymmetric nature, results in a highly characteristic microstructure. The microstructure can be broken up into the following zones:

- The stir zone (also nugget, dynamically recrystallised zone) is a region of heavily deformed material that roughly corresponds to the location of the pin during welding. The grains within the stir zone are roughly equiaxed and often an order of magnitude smaller than the grains in the parent material. A unique feature of the stir zone is the common occurrence of several concentric rings, which have been referred to as an "onion-ring" structure. The precise origin of these rings has not been firmly established, although variations in particle number density, grain size and texture have all been suggested.

- The flow arm zone is on the upper surface of the weld and consists of material that is dragged by the shoulder from the retreating side of the weld, around the rear of the tool, and deposited on the advancing side.

- The thermo-mechanically affected zone (TMAZ) occurs on either side of the stir zone. In this region the strain and temperature are lower and the effect of welding on the microstructure is correspondingly smaller. Unlike the stir zone the microstructure is recognizably that of the parent material, albeit significantly deformed and rotated. Although the term TMAZ technically refers to the entire deformed region it is often used to describe any region not already covered by the terms stir zone and flow arm.

- The Heat-Affected Zone (HAZ) is common to all welding processes. As indicated by the name, this region is subjected to a thermal cycle but is not deformed during welding. The temperatures are lower than those in the TMAZ but may still have a significant effect if the microstructure is thermally unstable. In fact, in age-hardened aluminium alloys this region commonly exhibits the poorest mechanical properties.

Advantages and disadvantages

The solid-state nature of FSW leads to several advantages over fusion welding methods, since any problems associated with cooling from the liquid phase are avoided. Issues such as porosity, solute redistribution, solidification cracking and liquation cracking do not arise during FSW. In general, FSW has been found to produce a low concentration of defects and is very tolerant of variations in parameters and materials.

Nevertheless, FSW is associated with a number of unique defects. Insufficient weld temperatures, due to low rotational speeds or high traverse speeds, for example, mean that the weld material is unable to accommodate the extensive deformation during welding. This may result in long, tunnel-like defects running along the weld which may occur on the surface or subsurface. Low temperatures may also limit the forging action of the tool and so reduce the continuity of the bond between the material from each side of the weld. The light contact between the material has given rise to the name "kissing-bond". This defect is particularly worrying since it is very difficult to detect using nondestructive methods such as X-ray or ultrasonic testing. If the pin is not long enough or the tool rises out of the plate then the interface at the bottom of the weld may not be disrupted and forged by the tool, resulting in a lack-of-penetration defect. This is essentially a notch in the material which can be a potent source of fatigue cracks.

A number of potential advantages of FSW over conventional fusion-welding processes have been identified:

- Good mechanical properties in the as-welded condition.

- Improved safety due to the absence of toxic fumes or the spatter of molten material.

- No consumables: a threaded pin made of conventional tool steel, e.g. hardened H13, can weld over 1000 m of aluminium, and no filler or gas shield is required for aluminium.

- Easily automated on simple milling machines, giving lower setup costs and less training.

- Can operate in all positions (horizontal, vertical, etc.), as there is no weld pool.

- Generally good weld appearance and minimal thickness under/over-matching, thus reducing the need for expensive machining after welding.

- Low environmental impact.

However, some disadvantages of the process have been identified:

- Exit hole left when tool is withdrawn.

- Large down forces required with heavy-duty clamping necessary to hold the plates together.

- Less flexible than manual and arc processes (difficulties with thickness variations and non-linear welds).

- Often slower traverse rate than some fusion welding techniques, although this may be offset if fewer welding passes are required.

Important welding parameters

Tool rotation and traverse speeds

There are two tool speeds to be considered in friction-stir welding: how fast the tool rotates and how quickly it traverses the interface. These two parameters have considerable importance and must be chosen with care to ensure a successful and efficient welding cycle. The relationship between the welding speeds and the heat input during welding is complex but, in general, increasing the rotation speed or decreasing the traverse speed will result in a hotter weld. To produce a successful weld, the material surrounding the tool must be hot enough to enable the extensive plastic flow required and to minimise the forces acting on the tool. If the material is too cold then voids or other flaws may be present in the stir zone and in extreme cases the tool may break.

Excessively high heat input, on the other hand, may be detrimental to the final properties of the weld. Theoretically, this could even result in defects due to the liquation of low-melting-point phases (similar to liquation cracking in fusion welds). These competing demands lead to the concept of a "processing window": the range of processing parameters, viz. tool rotation and traverse speed, that will produce a good-quality weld. Within this window the resulting weld will have a sufficiently high heat input to ensure adequate material plasticity, but not so high that the weld properties deteriorate excessively.
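The idea of a processing window can be illustrated with a small Python sketch. The heat indicator used here (tool revolutions per millimetre of travel) and both limits are hypothetical placeholders chosen only for illustration; real windows are established experimentally for each alloy, plate thickness and tool.

```python
def revolutions_per_mm(rotation_rpm: float, traverse_mm_per_min: float) -> float:
    """Tool revolutions per millimetre of weld: a crude proxy for heat input."""
    if rotation_rpm <= 0 or traverse_mm_per_min <= 0:
        raise ValueError("speeds must be positive")
    return rotation_rpm / traverse_mm_per_min


def classify_weld(rotation_rpm: float, traverse_mm_per_min: float,
                  cold_limit: float = 1.0, hot_limit: float = 10.0) -> str:
    """Place a parameter pair relative to an assumed processing window.

    cold_limit and hot_limit are illustrative values, not measured data.
    """
    ratio = revolutions_per_mm(rotation_rpm, traverse_mm_per_min)
    if ratio < cold_limit:
        return "too cold"      # risk of voids or tool fracture
    if ratio > hot_limit:
        return "too hot"       # risk of degraded weld properties
    return "within window"
```

Raising the rotation speed or lowering the traverse speed increases the ratio, which matches the qualitative trend described above; the single ratio is a simplification of a relationship the text itself calls complex.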

Tool tilt and plunge depth

The plunge depth is defined as the depth of the lowest point of the shoulder below the surface of the welded plate and has been found to be a critical parameter for ensuring weld quality. Plunging the shoulder below the plate surface increases the pressure below the tool and helps ensure adequate forging of the material at the rear of the tool. Tilting the tool by 2-4 degrees, such that the rear of the tool is lower than the front, has been found to assist this forging process. The plunge depth needs to be correctly set, both to ensure the necessary downward pressure is achieved and to ensure that the tool fully penetrates the weld. Given the high loads required the welding machine may deflect and so reduce the plunge depth compared to the nominal setting, which may result in flaws in the weld. On the other hand an excessive plunge depth may result in the pin rubbing on the backing plate surface or a significant undermatch of the weld thickness compared to the base material. Variable load welders have been developed to automatically compensate for changes in the tool displacement while TWI have demonstrated a roller system that maintains the tool position above the weld plate.
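The effect of tool tilt on plunge depth can be sketched with simple geometry. The example below assumes a flat, rigid shoulder (most real shoulders are concave) and uses hypothetical function names; it shows how tilting lowers the trailing edge of the shoulder, and how machine deflection reduces the depth actually achieved.

```python
import math


def heel_plunge_depth(centre_depth_mm: float, shoulder_radius_mm: float,
                      tilt_deg: float) -> float:
    """Depth of the trailing edge (heel) of a flat shoulder below the plate surface.

    centre_depth_mm is the depth of the shoulder centre below the surface
    (negative if the centre sits above the surface).
    """
    return centre_depth_mm + shoulder_radius_mm * math.sin(math.radians(tilt_deg))


def achieved_plunge_depth(nominal_depth_mm: float, machine_deflection_mm: float) -> float:
    """Plunge depth after subtracting the elastic deflection of the machine."""
    return nominal_depth_mm - machine_deflection_mm
```

For a shoulder of 10 mm radius tilted by 3 degrees, the heel sits roughly half a millimetre below the shoulder centre, which is of the same order as typical plunge depths.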

Tool design

The design of the tool is a critical factor, as a good tool can improve both the quality of the weld and the maximum possible welding speed. It is desirable that the tool material is sufficiently strong, tough and hard-wearing at the welding temperature. Further, it should have good oxidation resistance and a low thermal conductivity to minimise heat loss and thermal damage to the machinery further up the drive train. Hot-worked tool steel such as AISI H13 has proven perfectly acceptable for welding aluminium alloys within thickness ranges of 0.5 to 50 mm, but more advanced tool materials are necessary for more demanding applications such as highly abrasive metal matrix composites or higher-melting-point materials such as steel or titanium.

Improvements in tool design have been shown to cause substantial improvements in productivity and quality. TWI has developed tools specifically designed to increase the depth of penetration and so increase the plate thickness that can be successfully welded. An example is the "whorl" design that uses a tapered pin with re-entrant features or a variable pitch thread in order to improve the downwards flow of material. Additional designs include the Triflute and Trivex series. The Triflute design has a complex system of three tapering, threaded re-entrant flutes that appear to increase material movement around the tool. The Trivex tools use a simpler, non-cylindrical, pin and have been found to reduce the forces acting on the tool during welding.

The majority of tools have a concave shoulder profile which acts as an escape volume for the material displaced by the pin, prevents material from extruding out of the sides of the shoulder and maintains downwards pressure and hence good forging of the material behind the tool. The Triflute tool uses an alternative system with a series of concentric grooves machined into the surface which are intended to produce additional movement of material in the upper layers of the weld.

Welding forces

During welding a number of forces will act on the tool:

- A downwards force is necessary to maintain the position of the tool at or below the material surface. Some friction-stir welding machines operate under load control but in many cases the vertical position of the tool is preset and so the load will vary during welding.

- The traverse force acts parallel to the tool motion and is positive in the traverse direction. Since this force arises as a result of the resistance of the material to the motion of the tool it might be expected that this force will decrease as the temperature of the material around the tool is increased.

- The lateral force may act perpendicular to the tool traverse direction and is defined here as positive towards the advancing side of the weld.

- Torque is required to rotate the tool, the amount of which will depend on the down force and friction coefficient (sliding friction) and/or the flow strength of the material in the surrounding region (sticking friction).

In order to prevent tool fracture and to minimize excessive wear and tear on the tool and associated machinery, the welding cycle should be modified so that the forces acting on the tool are as low as possible, and abrupt changes are avoided. In order to find the best combination of welding parameters it is likely that a compromise must be reached, since the conditions that favour low forces (e.g. high heat input, low travel speeds) may be undesirable from the point of view of productivity and weld properties.

Flow of material

Early work on the mode of material flow around the tool used inserts of a different alloy, which had a different contrast to the normal material when viewed through a microscope, in an effort to determine where material was moved as the tool passed. The data was interpreted as representing a form of in-situ extrusion where the tool, backing plate and cold base material form the "extrusion chamber" through which the hot, plasticised material is forced. In this model the rotation of the tool draws little or no material around the front of the pin; instead, the material parts in front of the pin and passes down either side. After the material has passed the pin, the side pressure exerted by the "die" forces the material back together, and consolidation of the joint occurs as the rear of the tool shoulder passes overhead and the large down force forges the material.

More recently, an alternative theory has been advanced that advocates considerable material movement in certain locations. This theory holds that some material does rotate around the pin, for at least one rotation, and it is this material movement that produces the "onion-ring" structure in the stir zone. The researchers used a combination of thin Cu strip inserts and a "frozen pin" technique, where the tool is rapidly stopped in place. They suggested that material motion occurs by two processes:

- Material on the advancing front side of a weld enters into a zone that rotates and advances with the pin. This material was very highly deformed and sloughed off behind the pin to form arc-shaped features when viewed from above (i.e. down the tool axis). It was noted that the copper entered the rotational zone around the pin, where it was broken up into fragments. These fragments were only found in the arc-shaped features of material behind the tool.

- The lighter material came from the retreating front side of the pin and was dragged around to the rear of the tool and filled in the gaps between the arcs of advancing side material. This material did not rotate around the pin and the lower level of deformation resulted in a larger grain size.

Generation and flow of heat

For any welding process it is, in general, desirable to increase the travel speed and minimise the heat input as this will increase productivity and possibly reduce the impact of welding on the mechanical properties of the weld. At the same time it is necessary to ensure that the temperature around the tool is sufficiently high to permit adequate material flow and prevent flaws or tool fracture.

When the traverse speed is increased, for a given heat input, there is less time for heat to conduct ahead of the tool and the thermal gradients are larger. At some point the speed will be so high that the material ahead of the tool will be too cold, and the flow stress too high, to permit adequate material movement, resulting in flaws or tool fracture. If the "hot zone" is too large then there is scope to increase the traverse speed and hence productivity.
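A rough feel for how far heat conducts ahead of the tool can be had from a scaling argument. In classical moving-heat-source solutions (Rosenthal-type), the temperature rise directly ahead of the source decays over a distance of order α/v, where α is the thermal diffusivity and v the traverse speed. The sketch below applies that scaling; it is not a model of FSW itself, and the diffusivity value in the usage note is an assumed, illustrative figure for an aluminium alloy.

```python
def preheat_length_mm(thermal_diffusivity_mm2_s: float,
                      traverse_speed_mm_s: float) -> float:
    """Characteristic distance ahead of a moving heat source over which
    the temperature rise decays (order-of-magnitude estimate, alpha / v)."""
    if thermal_diffusivity_mm2_s <= 0 or traverse_speed_mm_s <= 0:
        raise ValueError("inputs must be positive")
    return thermal_diffusivity_mm2_s / traverse_speed_mm_s
```

With an assumed diffusivity of 60 mm²/s, raising the traverse speed from 2 mm/s to 10 mm/s shrinks this length from 30 mm to 6 mm, which illustrates why the material ahead of a fast-moving tool is colder.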

The welding cycle can be split into several stages during which the heat flow and thermal profile will be different:

- Dwell. The material is preheated by a stationary, rotating tool in order to achieve a sufficient temperature ahead of the tool to allow the traverse. This period may also include the plunge of the tool into the workpiece.

- Transient heating. When the tool begins to move there will be a transient period where the heat production and temperature around the tool will alter in a complex manner until an essentially steady-state is reached.

- Pseudo steady-state. Although fluctuations in heat generation will occur the thermal field around the tool remains effectively constant, at least on the macroscopic scale.

- Post steady-state. Near the end of the weld heat may "reflect" from the end of the plate leading to additional heating around the tool.

Heat generation during friction-stir welding arises from two main sources: friction at the surface of the tool and the deformation of the material around the tool. The heat generation is often assumed to occur predominantly under the shoulder, due to its greater surface area, and to be equal to the power required to overcome the contact forces between the tool and the workpiece. The contact condition under the shoulder can be described by sliding friction, using a friction coefficient μ and interfacial pressure P, or sticking friction, based on the interfacial shear strength τ at an appropriate temperature and strain rate. Mathematical approximations for the total heat generated by the tool shoulder, $Q_{\text{total}}$, have been developed using both sliding and sticking friction models:

$$Q_{\text{total}} = \frac{2}{3}\,\pi P \mu \omega \left(R_{\text{shoulder}}^{3} - R_{\text{pin}}^{3}\right) \quad \text{(sliding)}$$

$$Q_{\text{total}} = \frac{2}{3}\,\pi \tau \omega \left(R_{\text{shoulder}}^{3} - R_{\text{pin}}^{3}\right) \quad \text{(sticking)}$$

where ω is the angular velocity of the tool, $R_{\text{shoulder}}$ is the radius of the tool shoulder and $R_{\text{pin}}$ that of the pin. Several other equations have been proposed to account for factors such as the pin but the general approach remains the same.
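The form of the sliding-friction expression can be recovered with a short derivation, sketched here under the assumptions of a flat shoulder and a uniform pressure P and friction coefficient μ over the annulus between pin and shoulder. A ring of radius r and width dr carries a friction force μP·2πr dr and moves at speed ωr, so:

```latex
\begin{aligned}
dQ &= (\omega r)\,(\mu P)\,(2\pi r\,dr) = 2\pi \mu P \omega\, r^{2}\,dr \\
Q_{\text{total}} &= \int_{R_{\text{pin}}}^{R_{\text{shoulder}}} 2\pi \mu P \omega\, r^{2}\,dr
 = \frac{2}{3}\,\pi P \mu \omega \left(R_{\text{shoulder}}^{3} - R_{\text{pin}}^{3}\right)
\end{aligned}
```

Under sticking conditions the interfacial shear stress is the shear strength τ rather than μP, so the same integral gives the sticking form with τ in place of the product μP.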

A major difficulty in applying these equations is determining suitable values for the friction coefficient or the interfacial shear stress. The conditions under the tool are both extreme and very difficult to measure. To date, these parameters have been used as "fitting parameters" where the model works back from measured thermal data to obtain a reasonable simulated thermal field. While this approach is useful for creating process models to predict, for example, residual stresses it is less useful for providing insights into the process itself.
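The use of the friction coefficient as a fitting parameter can be illustrated as follows. This is a minimal sketch built on the sliding-friction shoulder expression; the function names and all numerical values are hypothetical, and a real process model would fit against a measured thermal field rather than a single heat value.

```python
import math


def rpm_to_rad_s(rpm: float) -> float:
    """Convert rotation speed from rev/min to rad/s."""
    return rpm * 2.0 * math.pi / 60.0


def shoulder_heat_sliding(pressure_pa: float, mu: float, omega_rad_s: float,
                          r_shoulder_m: float, r_pin_m: float) -> float:
    """Shoulder heat generation (W) under the sliding-friction approximation."""
    return (2.0 / 3.0) * math.pi * pressure_pa * mu * omega_rad_s * (
        r_shoulder_m ** 3 - r_pin_m ** 3)


def fitted_friction_coefficient(measured_heat_w: float, pressure_pa: float,
                                omega_rad_s: float, r_shoulder_m: float,
                                r_pin_m: float) -> float:
    """Work back from a measured heat input to the effective friction coefficient."""
    heat_per_unit_mu = shoulder_heat_sliding(
        pressure_pa, 1.0, omega_rad_s, r_shoulder_m, r_pin_m)
    return measured_heat_w / heat_per_unit_mu


# Illustrative values only: 50 MPa pressure, 600 rev/min,
# 10 mm shoulder radius, 3 mm pin radius.
omega = rpm_to_rad_s(600.0)
heat_w = shoulder_heat_sliding(50e6, 0.3, omega, 0.010, 0.003)
mu_fit = fitted_friction_coefficient(heat_w, 50e6, omega, 0.010, 0.003)
```

Because the coefficient is chosen to reproduce the measurement, the round trip recovers the input value by construction, which is why such fitted parameters say little about the physics under the tool.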

Applications

The FSW process is currently patented by TWI in most industrialised countries and licensed to over 183 users. Friction stir welding and its variants, friction stir spot welding and friction stir processing, are used for the following industrial applications:

Shipbuilding and Offshore

In 1996 two Scandinavian aluminium extrusion companies became the first to apply FSW commercially: Sapa, for the manufacture of fish freezer panels, and Marine Aluminium Aanensen, for deck panels and helicopter landing platforms. Marine Aluminium Aanensen subsequently merged with Hydro Aluminium Maritime to become Hydro Marine Aluminium. Some of these freezer panels are now also produced by Riftec and Bayards. In 1997 two-dimensional friction stir welds in the hydrodynamically flared bow section of the hull of the ocean viewer vessel The Boss were produced at Research Foundation Institute with the first portable FSW machine. The Super Liner Ogasawara, built at Mitsui Engineering and Shipbuilding, is the largest friction stir welded ship so far. The Sea Fighter of Nichols Bros and the Freedom class Littoral Combat Ships contain panels prefabricated by the FSW fabricators Advanced Technology and Friction Stir Link respectively. The Houbei class missile boat has friction stir welded rocket launch containers made by the China Friction Stir Centre. HMNZS Rotoiti in New Zealand has FSW panels made by Donovans on a converted milling machine. Various companies apply FSW to armor plating for amphibious assault ships.

Boeing applies FSW to the Delta II and Delta IV expendable launch vehicles; the first of these with a friction stir welded interstage module was launched in 1999. The process is also used for the Space Shuttle external tank, for Ares I and for the Orion Crew Vehicle test article at NASA, as well as for the Falcon 1 and Falcon 9 rockets at SpaceX. The toe nails for the ramp of the Boeing C-17 Globemaster III cargo aircraft, made by Advanced Joining Technologies, and the cargo barrier beams for the Boeing 747 Large Cargo Freighter were the first commercially produced aircraft parts. FAA-approved wings and fuselage panels of the Eclipse 500 aircraft were made at Eclipse Aviation, which delivered 259 friction stir welded business jets before being forced into Chapter 7 liquidation. Floor panels for the Airbus A400M military aircraft are now made by Pfalz Flugzeugwerke, and Embraer used FSW for the Legacy 450 and 500 jets.

Figure: The centre tunnel of the Ford GT is made from two aluminium extrusions friction stir welded to a bent aluminium sheet and houses the fuel tank.

Aluminium engine cradles and suspension struts for the stretched Lincoln Town Car were the first automotive parts to be friction stir welded, at Tower Automotive, which also uses the process for the engine tunnel of the Ford GT. A spin-off of this company, Friction Stir Link, successfully exploits the FSW process, e.g. for the flatbed trailer "Revolution" of Fontaine Trailers. In Japan FSW is applied to suspension struts at Showa Denko and to joining aluminium sheets to galvanized steel brackets for the boot lid of the Mazda MX-5. Friction stir spot welding is successfully used for the bonnet and rear doors of the Mazda RX-8 and the boot lid of the Toyota Prius. Wheels are friction stir welded at Simmons Wheels, UT Alloy Works and Fundo. Rear seats for the Volvo V70 are friction stir welded at Sapa, HVAC pistons at Halla Climate Control and exhaust gas recirculation coolers at Pierburg. Tailor welded blanks are friction stir welded for the Audi R8 at Riftec. The B-column of the Audi R8 Spider is friction stir welded from two extrusions at Hammerer Aluminium Industries in Austria.

Figure: The high-strength low-distortion body of Hitachi's A-train British Rail Class 395 is friction stir welded from longitudinal aluminium extrusions.

Since 1997 roof panels have been made from aluminium extrusions at Hydro Marine Aluminium with a bespoke 25 m long FSW machine, e.g. for DSB class SA-SD trains of Alstom LHB. Curved side and roof panels for the Victoria Line trains of London Underground, side panels for Bombardier's Electrostar trains and side panels for Alstom's British Rail Class 390 Pendolino trains are made at Sapa Group. Japanese commuter and express A-trains and British Rail Class 395 trains are friction stir welded by Hitachi, while Kawasaki applies friction stir spot welding to roof panels and Sumitomo Light Metal produces Shinkansen floor panels. Innovative FSW floor panels are made by Hammerer Aluminium Industries in Austria for the Stadler DOSTO double decker rail cars, to obtain an internal height of 2 m on both floors.

Heat sinks for cooling high-power electronics of locomotives are made at Sykatek, EBG, Austerlitz Electronics, EuroComposite, Sapa and Rapid Technic, and are the most common application of FSW due to the excellent heat transfer. The FSW process is also used for IGBT coolers at Sapa Group.

Façade panels and cathode sheets are friction stir welded at AMAG and Hammerer Aluminium Industries, including friction stir lap welds of copper to aluminium. Bizerba's meat slicers, Ökolüfter HVAC units and Siemens X-ray vacuum vessels are friction stir welded at Riftec. Vacuum valves and vessels are made by FSW at Japanese and Swiss companies. FSW is also used for the encapsulation of nuclear waste at SKB in 50 mm thick copper canisters. Pressure vessels are made from ø1 m semispherical forgings of 38.1 mm thick aluminium alloy 2219 at Advanced Joining Technologies and Lawrence Livermore Nat Lab. Friction stir processing is applied to ship propellers at Friction Stir Link and to hunting knives by DiamondBlade.

Composites

Composites or composite materials are a combination of materials which provide different physical characteristics than either material separately. Composite material research within mechanical engineering typically focuses on designing (and, subsequently, finding applications for) stronger or more rigid materials while attempting to reduce weight, susceptibility to corrosion, and other undesirable factors. Carbon fiber reinforced composites, for instance, have been used in such diverse applications as spacecraft and fishing rods.

A very common example is disc brake pads, which consist of hard ceramic particles embedded in a soft metal matrix. Closer to home, shower stalls and bathtubs are often made of fibreglass, and imitation granite and cultured marble sinks and countertops are widely used. The most advanced examples perform routinely on spacecraft in demanding environments.

Fiber-reinforced composite materials can be divided into two main categories normally referred to as short fiber-reinforced materials and continuous fiber-reinforced materials. Continuous reinforced materials will often constitute a layered or laminated structure. The woven and continuous fibre styles are typically available in a variety of forms, being pre-impregnated with the given matrix (resin), dry, uni-directional tapes of various widths, plain weave, harness satins, braided, and stitched.

Composites can fail on the microscopic or macroscopic scale. Compression failures can occur either at the macro scale or at each individual reinforcing fibre through compression buckling. Tension failures can be net section failures of the part or degradation of the composite at a microscopic scale, where one or more of the layers in the composite fail through tension of the matrix or failure of the bond between the matrix and fibres.

Some composites are brittle and have little reserve strength beyond the initial onset of failure while others may have large deformations and have reserve energy absorbing capacity past the onset of damage. The variations in fibres and matrices that are available and the mixtures that can be made with blends leave a very broad range of properties that can be designed into a composite structure. The best known failure of a brittle ceramic matrix composite occurred when the carbon-carbon composite tile on the leading edge of the wing of the Space Shuttle Columbia fractured when impacted during take-off. It led to catastrophic break-up of the vehicle when it re-entered the Earth's atmosphere on 1 February 2003.

Compared to metals, composites have relatively poor bearing strength.

Shape memory polymer composites are high-performance composites, formulated using fibre or fabric reinforcement and shape memory polymer resin as the matrix. Since a shape memory polymer resin is used as the matrix, these composites have the ability to be easily manipulated into various configurations when they are heated above their activation temperatures and will exhibit high strength and stiffness at lower temperatures. They can also be reheated and reshaped repeatedly without losing their material properties. These composites are ideal for applications such as lightweight, rigid, deployable structures; rapid manufacturing; and dynamic reinforcement.

Composites can also use metal fibres reinforcing other metals, as in metal matrix composites or MMC. The benefit of magnesium is that it does not degrade in outer space. Ceramic matrix composites include bone (hydroxyapatite reinforced with collagen fibres), Cermet (ceramic and metal) and concrete. Ceramic matrix composites are built primarily for fracture toughness, not for strength. Organic matrix/ceramic aggregate composites include asphalt concrete, mastic asphalt, mastic roller hybrid, dental composite, syntactic foam and mother of pearl. Chobham armour is a special type of composite armour used in military applications.

Additionally, thermoplastic composite materials can be formulated with specific metal powders resulting in materials with a density range from 2 g/cm³ to 11 g/cm³ (same density as lead). The most common name for this type of material is High Gravity Compound (HGC), although Lead Replacement is also used. These materials can be used in place of traditional materials such as aluminium, stainless steel, brass, bronze, copper, lead, and even tungsten in weighting, balancing (for example, modifying the centre of gravity of a tennis racquet), vibration dampening, and radiation shielding applications. High density composites are an economically viable option when certain materials are deemed hazardous and are banned (such as lead) or when secondary operations costs (such as machining, finishing, or coating) are a factor.
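How much metal powder such a compound needs can be estimated with the rule of mixtures for density. The sketch below assumes a void-free two-phase mixture and works in volume fractions; the function names are hypothetical, and the densities in the usage note (tungsten at 19.3 g/cm³ and a generic thermoplastic at 1.1 g/cm³) are illustrative inputs rather than data from a specific product.

```python
def composite_density(filler_volume_fraction: float, filler_density: float,
                      matrix_density: float) -> float:
    """Density of a void-free two-phase mixture (rule of mixtures)."""
    if not 0.0 <= filler_volume_fraction <= 1.0:
        raise ValueError("volume fraction must lie between 0 and 1")
    return (filler_volume_fraction * filler_density
            + (1.0 - filler_volume_fraction) * matrix_density)


def filler_fraction_for_target(target_density: float, filler_density: float,
                               matrix_density: float) -> float:
    """Filler volume fraction needed to reach a target density."""
    if not matrix_density <= target_density <= filler_density:
        raise ValueError("target must lie between matrix and filler densities")
    return (target_density - matrix_density) / (filler_density - matrix_density)
```

With those assumed densities, matching the 11 g/cm³ upper end of the range quoted above would take a tungsten volume fraction of a little over one half.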

Engineered wood includes a wide variety of different products such as wood fibre board, plywood, oriented strand board, wood plastic composite (recycled wood fibre in polyethylene matrix), Pykrete (sawdust in ice matrix), Plastic-impregnated or laminated paper or textiles, Arborite, Formica (plastic) and Micarta. Other engineered laminate composites, such as Mallite, use a central core of end grain balsa wood, bonded to surface skins of light alloy or GRP. These generate low-weight, high rigidity materials.

Carbon composite is a key material in today's launch vehicles and heat shields for the re-entry phase of spacecraft. It is widely used in solar panel substrates, antenna reflectors and yokes of spacecraft. It is also used in payload adapters, inter-stage structures and heat shields of launch vehicles. Furthermore, disc brake systems of aircraft and racing cars use carbon/carbon material, and a composite material with carbon fibres and a silicon carbide matrix has been introduced in luxury vehicles and sports cars.

In 2007, an all-composite military Humvee was introduced by TPI Composites Inc and Armor Holdings Inc, the first all-composite military vehicle. By using composites the vehicle is lighter, allowing higher payloads. In 2008, carbon fiber and DuPont Kevlar (five times stronger than steel) were combined with enhanced thermoset resins to make military transit cases by ECS Composites creating 30-percent lighter cases with high strength.

Many composite layup designs also include a co-curing or post-curing of the prepreg with various other mediums, such as honeycomb or foam. This is commonly called a sandwich structure. This is a more common layup process for the manufacture of radomes, doors, cowlings, or non-structural parts.

The finishing of the composite parts is also critical in the final design. Many of these finishes will include rain-erosion coatings or polyurethane coatings.

Composite materials, often shortened to composites, are engineered or naturally occurring materials made from two or more constituent materials with significantly different physical or chemical properties which remain separate and distinct at the macroscopic or microscopic scale within the finished structure.

Figure: A cloth of woven carbon fiber filaments, a common element in composite materials.

Tooling

Some types of tooling materials used in the manufacturing of composite structures include invar, steel, aluminium, reinforced silicone rubber, nickel, and carbon fibre. Selection of the tooling material is typically based on, but not limited to, the coefficient of thermal expansion, expected number of cycles, end item tolerance, desired or required surface condition, method of cure, glass transition temperature of the material being moulded, moulding method, matrix, cost and a variety of other considerations.

Properties

Mechanics

The physical properties of composite materials are generally not isotropic (independent of the direction of applied force) in nature, but rather are typically orthotropic (different depending on the direction of the applied force or load). For instance, the stiffness of a composite panel will often depend upon the orientation of the applied forces and/or moments. Panel stiffness is also dependent on the design of the panel: for instance, the fibre reinforcement and matrix used, the method of panel build, thermoset versus thermoplastic, type of weave, and orientation of the fibre axis to the primary force. In contrast, isotropic materials (for example, aluminium or steel), in standard wrought forms, typically have the same stiffness regardless of the directional orientation of the applied forces and/or moments.
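The dependence of stiffness on load direction can be made concrete with the standard off-axis modulus relation for a single unidirectional ply in plane stress. The sketch below is illustrative only: the function name is hypothetical, and it covers one ply rather than a laminated panel.

```python
import math


def off_axis_modulus(e1: float, e2: float, g12: float, nu12: float,
                     angle_deg: float) -> float:
    """Young's modulus of a unidirectional ply loaded at angle_deg to the fibres.

    e1, e2: moduli along and across the fibres; g12: in-plane shear modulus;
    nu12: major Poisson's ratio. Any consistent unit of stress may be used.
    """
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    compliance = (c ** 4 / e1
                  + (1.0 / g12 - 2.0 * nu12 / e1) * s ** 2 * c ** 2
                  + s ** 4 / e2)
    return 1.0 / compliance
```

For an isotropic material, where the shear modulus equals E / (2(1 + ν)), the same expression returns E at every angle, which is the contrast drawn in the paragraph above.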

The relationship between forces/moments and strains/curvatures for an isotropic material can be described with the following material properties: Young's modulus, the shear modulus and Poisson's ratio, in relatively simple mathematical relationships. For an anisotropic material, tensor mathematics is required: the second-order stress and strain tensors are related by a fourth-order stiffness tensor with up to 21 independent material property constants. For the special case of orthogonal isotropy (orthotropy), there are three different material property constants for each of Young's modulus, shear modulus and Poisson's ratio, a total of 9 constants to describe the relationship between forces/moments and strains/curvatures.
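The nine orthotropic constants can be seen at work by assembling the compliance matrix that maps stress to strain. The sketch below uses Voigt notation with the component order 11, 22, 33, 23, 13, 12, which is one common convention and an assumption here; the function names are hypothetical. The isotropic shear-modulus relation is included for comparison.

```python
def isotropic_shear_modulus(e: float, nu: float) -> float:
    """Shear modulus of an isotropic material from Young's modulus and Poisson's ratio."""
    return e / (2.0 * (1.0 + nu))


def orthotropic_compliance(e1, e2, e3, g12, g13, g23, nu12, nu13, nu23):
    """6x6 compliance matrix of an orthotropic material built from 9 constants."""
    s = [[0.0] * 6 for _ in range(6)]
    # Normal terms on the diagonal
    s[0][0], s[1][1], s[2][2] = 1.0 / e1, 1.0 / e2, 1.0 / e3
    # Poisson coupling terms; symmetry means nu_ij / E_i = nu_ji / E_j
    s[0][1] = s[1][0] = -nu12 / e1
    s[0][2] = s[2][0] = -nu13 / e1
    s[1][2] = s[2][1] = -nu23 / e2
    # Shear terms (no coupling between shear and normal components)
    s[3][3], s[4][4], s[5][5] = 1.0 / g23, 1.0 / g13, 1.0 / g12
    return s
```

Only 12 of the 36 entries are non-zero and the matrix is symmetric, so 9 independent constants suffice, compared with 2 for an isotropic material and up to 21 in the fully anisotropic case.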

Techniques that take advantage of the anisotropic properties of the materials include mortise and tenon joints (in natural composites such as wood) and Pi Joints in synthetic composites.

Resins

Typically, most common composite materials, including fiberglass, carbon fiber, and Kevlar, include at least two parts, the substrate and the resin.

Polyester resin tends to have a yellowish tint, and is suitable for most backyard projects. Its weaknesses are that it is UV sensitive and tends to degrade over time, so it is generally coated to help preserve it. It is often used in the making of surfboards and for marine applications. Its hardener is MEKP (methyl ethyl ketone peroxide), a catalyst, mixed at 14 drops per oz. When MEKP is mixed with the resin, the resulting chemical reaction causes heat to build up and cure or harden the resin.

Vinylester resin tends to have a purplish to bluish to greenish tint. This resin has lower viscosity than polyester resin, and is more transparent. This resin is often billed as being fuel resistant, but will melt in contact with gasoline. This resin tends to be more resistant over time to degradation than polyester resin, and is more flexible. It uses the same hardener as polyester resin (at the same mix ratio) and the cost is approximately the same.

Epoxy resin is almost totally transparent when cured. In the aerospace industry, epoxy is used as a structural matrix material or as a structural glue.

Shape memory polymer (SMP) resins have varying visual characteristics depending on their formulation. These resins may be epoxy-based, which can be used for auto body and outdoor equipment repairs; cyanate-ester-based, which are used in space applications; and acrylate-based, which can be used in very cold temperature applications, such as for sensors that indicate whether perishable goods have warmed above a certain maximum temperature. These resins are unique in that their shape can be repeatedly changed by heating above their glass transition temperature (Tg). When heated, they become flexible and elastic, allowing for easy configuration. Once they are cooled, they will maintain their new shape. The resins will return to their original shapes when they are reheated above their Tg. The advantage of shape memory polymer resins is that they can be shaped and reshaped repeatedly without losing their material properties, and these resins can be used in fabricating shape memory composites.

Categories of fiber-reinforced composite materials

Figure: Typologies of fibre-reinforced composite materials: a) continuous fibre-reinforced, b) discontinuous aligned fibre-reinforced, c) discontinuous random-oriented fibre-reinforced.

Short and long fibers are typically employed in compression moulding and sheet moulding operations. They come in the form of flakes, chips, and random mat (which can also be made from a continuous fibre laid in random fashion until the desired thickness of the ply or laminate is achieved).

Failure

Shock, impact, or repeated cyclic stresses can cause the laminate to separate at the interface between two layers, a condition known as delamination. Individual fibres can separate from the matrix, e.g. fibre pull-out.

Composites can fail on the microscopic or macroscopic scale. Compression failures can occur at the macro scale or at each individual reinforcing fibre in compression buckling. Tension failures can be net section failures of the part or degradation of the composite at a microscopic scale, where one or more of the layers in the composite fail in tension of the matrix or through failure of the bond between the matrix and fibres.

Some composites are brittle and have little reserve strength beyond the initial onset of failure while others may have large deformations and have reserve energy absorbing capacity past the onset of damage. The variations in fibres and matrices that are available and the mixtures that can be made with blends leave a very broad range of properties that can be designed into a composite structure. The best known failure of a brittle ceramic matrix composite occurred when the carbon-carbon composite tile on the leading edge of the wing of the Space Shuttle Columbia fractured when impacted during take-off. It led to catastrophic break-up of the vehicle when it re-entered the Earth's atmosphere on 1 February 2003.

Compared to metals, composites have relatively poor bearing strength.

Testing

To aid in predicting and preventing failures, composites are tested before and after construction. Pre-construction testing may use finite element analysis (FEA) for ply-by-ply analysis of curved surfaces and predicting wrinkling, crimping and dimpling of composites. Materials may be tested after construction through several nondestructive methods including ultrasonics, thermography, shearography and X-ray radiography.

Materials

Fibre-reinforced polymers or FRPs include wood (comprising cellulose fibres in a lignin and hemicellulose matrix), carbon-fibre reinforced plastic or CFRP, and glass-reinforced plastic or GRP. If classified by matrix then there are thermoplastic composites, short fibre thermoplastics, long fibre thermoplastics or long fibre-reinforced thermoplastics. There are numerous thermoset composites, but advanced systems usually incorporate aramid fibre and carbon fibre in an epoxy resin matrix.

Shape memory polymer composites are high-performance composites, formulated using fibre or fabric reinforcement and shape memory polymer resin as the matrix. Since a shape memory polymer resin is used as the matrix, these composites have the ability to be easily manipulated into various configurations when they are heated above their activation temperatures and will exhibit high strength and stiffness at lower temperatures. They can also be reheated and reshaped repeatedly without losing their material properties. These composites are ideal for applications such as lightweight, rigid, deployable structures; rapid manufacturing; and dynamic reinforcement.

Composites can also use metal fibres reinforcing other metals, as in metal matrix composites or MMC. A benefit of magnesium, when used in such composites, is that it does not degrade in outer space. Ceramic matrix composites include bone (hydroxyapatite reinforced with collagen fibres), cermet (ceramic and metal) and concrete. Ceramic matrix composites are built primarily for fracture toughness, not for strength. Organic matrix/ceramic aggregate composites include asphalt concrete, mastic asphalt, mastic roller hybrid, dental composite, syntactic foam and mother of pearl. Chobham armour is a special type of composite armour used in military applications.

Additionally, thermoplastic composite materials can be formulated with specific metal powders resulting in materials with a density range from 2 g/cm³ to 11 g/cm³ (same density as lead). The most common name for this type of material is High Gravity Compound (HGC), although Lead Replacement is also used. These materials can be used in place of traditional materials such as aluminium, stainless steel, brass, bronze, copper, lead, and even tungsten in weighting, balancing (for example, modifying the centre of gravity of a tennis racquet), vibration dampening, and radiation shielding applications. High density composites are an economically viable option when certain materials are deemed hazardous and are banned (such as lead) or when secondary operations costs (such as machining, finishing, or coating) are a factor.
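How a target density in this range might be reached can be sketched with the simple rule of mixtures for density, assuming a void-free blend of one metal powder in one polymer. The function names are hypothetical, and the polymer density of 1.1 g/cm³ is an illustrative assumption rather than a value from the text; tungsten is taken at about 19.3 g/cm³.

```python
def composite_density(filler_density, matrix_density, filler_volume_fraction):
    """Rule-of-mixtures density of a void-free two-phase blend (same units in and out)."""
    if not 0.0 <= filler_volume_fraction <= 1.0:
        raise ValueError("volume fraction must lie between 0 and 1")
    return (filler_volume_fraction * filler_density
            + (1.0 - filler_volume_fraction) * matrix_density)


def filler_fraction_for_target(target_density, filler_density, matrix_density):
    """Invert the rule of mixtures: filler volume fraction needed for a target density."""
    fraction = (target_density - matrix_density) / (filler_density - matrix_density)
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("target density is not reachable with these two phases")
    return fraction


# Illustrative values only: tungsten powder in an assumed 1.1 g/cm^3 polymer.
TUNGSTEN = 19.3   # g/cm^3
POLYMER = 1.1     # g/cm^3 (assumption for this sketch)
fraction_for_lead_like = filler_fraction_for_target(11.0, TUNGSTEN, POLYMER)
```

Under these assumptions, a little over half the volume would need to be tungsten powder to match a density of 11 g/cm³. Real compounds also have to stay processable at such loadings, which this sketch does not model.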

Engineered wood includes a wide variety of different products such as wood fibre board, plywood, oriented strand board, wood plastic composite (recycled wood fibre in polyethylene matrix), Pykrete (sawdust in ice matrix), Plastic-impregnated or laminated paper or textiles, Arborite, Formica (plastic) and Micarta. Other engineered laminate composites, such as Mallite, use a central core of end grain balsa wood, bonded to surface skins of light alloy or GRP. These generate low-weight, high rigidity materials.

Products

Composite materials have gained popularity (despite their generally high cost) in high-performance products that need to be lightweight, yet strong enough to take harsh loading conditions, such as aerospace components (tails, wings, fuselages, propellers), boat and scull hulls, bicycle frames and racing car bodies. Other uses include fishing rods, storage tanks, and baseball bats. The Boeing 787 structure, including the wings and fuselage, is composed largely of composites. Composite materials are also becoming more common in the realm of orthopedic surgery.

Carbon composite is a key material in today's launch vehicles and heat shields for the re-entry phase of spacecraft. It is widely used in solar panel substrates, antenna reflectors and yokes of spacecraft. It is also used in payload adapters, inter-stage structures and heat shields of launch vehicles. Furthermore, disk brake systems of airplanes and racing cars use carbon/carbon material, and a composite material with carbon fibers and a silicon carbide matrix has been introduced in luxury vehicles and sports cars.

In 2007, an all-composite military Humvee was introduced by TPI Composites Inc and Armor Holdings Inc, the first all-composite military vehicle. By using composites the vehicle is lighter, allowing higher payloads. In 2008, carbon fiber and DuPont Kevlar (five times stronger than steel) were combined with enhanced thermoset resins to make military transit cases by ECS Composites creating 30-percent lighter cases with high strength.

Many composite layup designs also include a co-curing or post-curing of the prepreg with various other mediums, such as honeycomb or foam. This is commonly called a sandwich structure. This is a more common layup process for the manufacture of radomes, doors, cowlings, or non-structural parts.

The finishing of the composite parts is also critical in the final design. Many of these finishes will include rain-erosion coatings or polyurethane coatings.

Mechatronics

Mechatronics is the combination of mechanical engineering, electronic engineering, computer engineering, software engineering, control engineering, and systems design engineering in order to design and manufacture useful products. Mechatronics is a multidisciplinary field of engineering; that is to say, it rejects splitting engineering into separate disciplines. Originally, mechatronics included only the combination of mechanics and electronics, hence the word is a portmanteau of mechanics and electronics. However, as technical systems have become more and more complex, the word has been "updated" in recent years to include more technical areas.

French standard NF E 01-010 gives the following definition: "approach aiming at the synergistic integration of mechanics, electronics, control theory, and computer science within product design and manufacturing, in order to improve and/or optimize its functionality".

Description

A mechatronics engineer unites the principles of mechanics, electronics, and computing to generate a simpler, more economical and reliable system. Mechatronics is centered on mechanics, electronics, computing, control engineering, molecular engineering (from nanochemistry and biology), and optical engineering, which, combined, make possible the generation of simpler, more economical, reliable and versatile systems. The portmanteau "mechatronics" was coined by Tetsuro Mori, the senior engineer of the Japanese company Yaskawa, in 1969. An industrial robot is a prime example of a mechatronics system; it includes aspects of electronics, mechanics, and computing to do its day-to-day jobs.

Engineering cybernetics deals with the question of control engineering of mechatronic systems. It is used to control or regulate such a system (see control theory). Modern production equipment consists of mechatronic modules that are integrated according to a control architecture. Through collaboration, the mechatronic modules perform the production goals and inherit flexible and agile manufacturing properties in the production scheme. The best-known architectures involve hierarchy, polyarchy, heterarchy, and hybrid. The methods for achieving a technical effect are described by control algorithms, which might or might not utilize formal methods in their design. Hybrid systems important to mechatronics include production systems, synergy drives, planetary exploration rovers, automotive subsystems such as anti-lock braking systems and spin-assist, and everyday equipment such as autofocus cameras, video, hard disks, and CD players.
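The idea of a control algorithm regulating a mechatronic system can be illustrated with a minimal sketch: a discrete proportional-integral (PI) controller driving a simulated first-order plant, such as a motor speed loop. The text does not prescribe any particular algorithm; the class name, gains, time constant and setpoint below are all assumptions picked for illustration.

```python
class PIController:
    """Discrete proportional-integral controller (illustrative, not from the source)."""

    def __init__(self, kp, ki, dt):
        self.kp = kp          # proportional gain
        self.ki = ki          # integral gain
        self.dt = dt          # sample period in seconds
        self.integral = 0.0   # accumulated error

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        self.integral += error * self.dt
        return self.kp * error + self.ki * self.integral


def simulate(setpoint=100.0, steps=2000, dt=0.01, tau=0.5, gain=1.0):
    """Regulate an assumed first-order plant: tau * dy/dt = -y + gain * u.

    The plant is integrated with forward Euler; returns the final output.
    """
    controller = PIController(kp=2.0, ki=5.0, dt=dt)
    speed = 0.0
    for _ in range(steps):
        command = controller.update(setpoint, speed)   # sense and compute
        speed += dt * (-speed + gain * command) / tau  # actuate and evolve
    return speed
```

Calling `simulate()` runs 20 simulated seconds, after which the output has settled at the setpoint; the integral term is what removes the steady-state error that a purely proportional controller would leave. In a real system the plant update would be replaced by a sensor reading and an actuator command.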

Course structure

Mechatronics students take courses from across the various fields listed below:

- Mechanical engineering and materials science subjects

- Electronic engineering subjects

- Computer engineering subjects

- Computer science subjects

- Systems and control engineering subjects

- Optomechanics (optical engineering) subjects

- Robotics subjects

Application

- Machine vision

- Automation and robotics

- Servo-mechanics

- Sensing and control systems

- Automotive engineering, including the design of automotive subsystems such as anti-lock braking systems

- Computer-machine controls, such as computer-driven machines like CNC milling machines

- Expert systems

- Industrial goods

- Consumer products

- Mechatronics systems

- Medical mechatronics, medical imaging systems

- Structural dynamic systems

- Transportation and vehicular systems

- Mechatronics as the new language of the automobile

- Diagnostic, reliability, and control system techniques

- Computer aided and integrated manufacturing systems

- Computer-aided design

- Engineering and manufacturing systems

- Packaging

Physical implementations

For most mechatronic systems, the main issue is no longer how to implement a control system, but how to implement the actuators and which energy source to use. Within the mechatronic field, two technologies are mainly used to produce movement: piezoelectric actuators and motors, and electromagnetic actuators and motors. Perhaps the best-known mechatronic systems are camera autofocus and camera anti-shake systems.

As for energy sources, most applications use batteries. A newer trend is energy harvesting, which converts mechanical energy from shock or vibration, thermal energy from temperature variation, and similar ambient sources into electricity.

Variant of the field